Years of Suboptimal Model Training? Understanding Gradient Accumulation

When it comes to fine-tuning large language models (LLMs) locally, many of us hit the same roadblock: large batch sizes demand a lot of GPU memory. So, how do we tackle this without breaking the bank on hardware? Enter gradient accumulation, a workaround that has become a go-to technique for training on limited hardware.

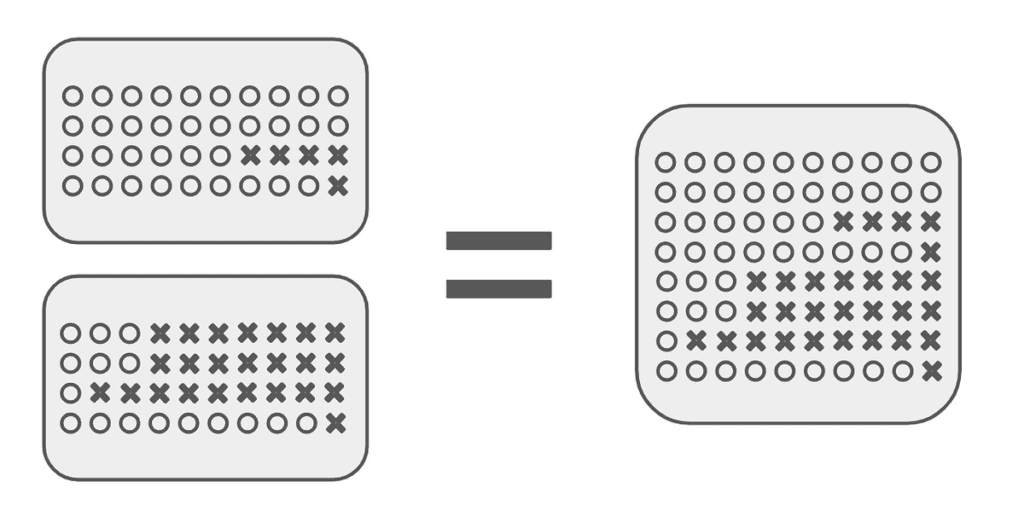

Gradient accumulation allows us to simulate larger batch sizes by summing the gradients from several smaller mini-batches before updating the model’s weights. Instead of updating the model after each mini-batch, we accumulate gradients over multiple iterations and then apply a single update. Only one mini-batch has to fit in memory at a time, so it feels like training on a larger batch without the memory load. Picture this: if you set a mini-batch size of just 1 and accumulate gradients over 32 mini-batches, you’d effectively train as if you had a batch size of 32. Sounds great, right?
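To make the idea concrete, here is a minimal sketch in plain Python, using a toy linear model rather than an LLM. The model, data, and names (`grad_mse`, `accum_steps`) are all assumptions made for illustration. Each mini-batch gradient is divided by the number of accumulation steps so that the accumulated result is an average, which is how a full-batch mean loss would behave.

```python
import random

def grad_mse(w, b, xs, ys):
    """Gradient of the mean squared error of y_hat = w * x + b over one batch."""
    n = len(xs)
    gw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    gb = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return gw, gb

# Toy data: 32 examples from a noisy line (purely illustrative).
random.seed(0)
xs = [random.uniform(-1, 1) for _ in range(32)]
ys = [3.0 * x + 0.5 + random.gauss(0, 0.1) for x in xs]
w, b = 0.0, 0.0

# Reference: gradient computed on the full batch of 32 at once.
full_gw, full_gb = grad_mse(w, b, xs, ys)

# Gradient accumulation: mini-batch size 1, accumulated over 32 steps.
accum_steps = 32
acc_gw, acc_gb = 0.0, 0.0
for x, y in zip(xs, ys):
    gw, gb = grad_mse(w, b, [x], [y])
    # Scale each contribution so the accumulated sum is an average.
    acc_gw += gw / accum_steps
    acc_gb += gb / accum_steps

# A single weight update happens only after all 32 mini-batches.
lr = 0.1
w_new = w - lr * acc_gw
b_new = b - lr * acc_gb

print(abs(acc_gw - full_gw), abs(acc_gb - full_gb))
```

In this toy setting every mini-batch has the same size, so the accumulated gradient matches the full-batch gradient up to floating-point error.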

However, not everything about gradient accumulation is rosy. I’ve personally noticed that models trained this way often perform worse than models trained with an actual batch of the same effective size in popular deep-learning frameworks, such as Hugging Face Transformers. Since the two setups are supposed to be close to equivalent, a consistent gap suggests that something is off.
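One way such a gap can arise, offered here as an assumption rather than something this article establishes, is loss normalization with sequences of different lengths. If each mini-batch loss is averaged over its own tokens and those averages are then averaged again, the result generally differs from the per-token mean over the full batch. The per-token loss values below are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def mean(values):
    return sum(values) / len(values)

# Hypothetical per-token losses for two sequences of different lengths.
seq_a = [2.0, 2.0, 2.0, 2.0, 2.0, 2.0]  # 6 tokens
seq_b = [0.5, 0.5]                      # 2 tokens

# Full batch: one mean over all 8 tokens.
full_batch = mean(seq_a + seq_b)

# Naive accumulation: mean per mini-batch, then mean of those means.
naive_accumulated = (mean(seq_a) + mean(seq_b)) / 2

# Token-weighted accumulation: sum the losses, divide by the total token count.
token_weighted = (sum(seq_a) + sum(seq_b)) / (len(seq_a) + len(seq_b))

print(full_batch)         # 1.625
print(naive_accumulated)  # 1.25
print(token_weighted)     # 1.625
```

In this sketch the naive average gives the short sequence as much weight as the long one, while dividing by the total token count recovers the full-batch value.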

After I shared my findings on social media platforms like X and Reddit, it turned out that others had run into similar problems. Daniel Han from Unsloth AI replicated the issue and found that the performance degradation wasn’t limited to gradient accumulation. It was also affecting multi-GPU setups. That’s a huge red flag for those of us deeply involved in AI development!

So, what does this mean for model training? It suggests that our standard methods may not be as reliable as we think, especially when fine-tuning LLMs with gradient accumulation or across multiple GPUs. It’s worth examining these techniques more closely and adjusting our approach so that we get the best out of our models.

As we navigate through these challenges, it’s vital to keep an open dialogue about our experiences and solutions. Sharing knowledge helps us all climb the steep learning curve of AI together. The AI Buzz Hub team is excited to see where these breakthroughs take us.