The surge in artificial intelligence (AI) is creating an unprecedented demand for data centers, as companies scramble to process ever-increasing volumes of data. This fast-paced race among tech giants to expand their infrastructures for AI workloads is accompanied by a pressing challenge: how to power these expansive operations sustainably and affordably. Some companies, like Oracle and Microsoft, have even begun to explore nuclear energy as a potential solution.

Beyond energy supply, another major concern is the heat generated by powerful AI hardware. Enter liquid cooling—an innovative approach that has gained traction as an effective method for maintaining optimal system performance amidst rising energy demands. A notable trend emerged in October 2024, with numerous tech firms unveiling their liquid-cooled solutions, signaling a significant industry pivot.

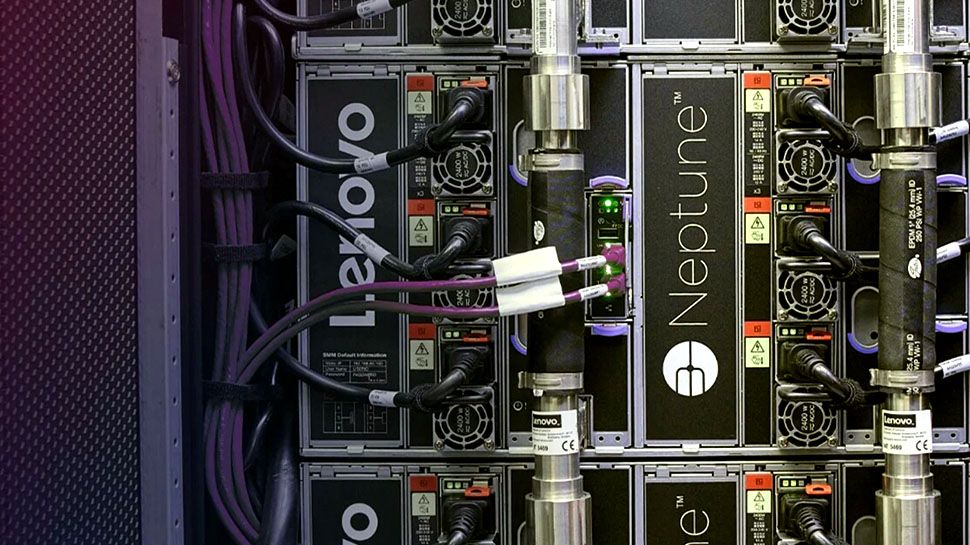

Liquid-Cooled SuperClusters

At its recent Tech World event, Lenovo unveiled the next generation of its Neptune liquid cooling solution for servers. The sixth iteration of Neptune uses open-loop, direct warm-water cooling and is now being rolled out across Lenovo’s partner ecosystem. According to Lenovo, the design lets organizations run accelerated computing for generative AI while cutting data center power consumption by up to 40%.

At the OCP Global Summit 2024, Giga Computing, a subsidiary of Gigabyte, introduced a direct liquid cooling (DLC) server tailored for Nvidia’s HGX H200 systems. Alongside the DLC server, Giga showcased the G593-SD1, equipped with a dedicated air cooling chamber for the Nvidia H200 Tensor Core GPU, catering to data centers that aren’t yet prepared to transition fully to liquid cooling.

Dell has also entered the liquid cooling arena with its new Integrated Rack 7000 (IR7000), engineered to handle future deployments of up to 480 kW per rack while capturing nearly all of the heat generated. Arthur Lewis, president of Dell’s Infrastructure Solutions Group, emphasized, “Today’s data centers can’t keep up with the demands of AI. We need high-density compute and liquid cooling innovations, with modular, flexible, and efficient designs.” These advancements are essential for organizations striving to remain competitive in the fast-paced AI landscape.

Meanwhile, Supermicro has unveiled its own line of liquid-cooled SuperClusters specifically designed for AI workloads, running on the Nvidia Blackwell platform. Supermicro’s liquid cooling solutions, which utilize the Nvidia GB200 NVL72 platform meant for exascale computing, are currently being sampled by select customers, with full-scale production anticipated for late Q4.

“We’re driving the future of sustainable AI computing,” said Charles Liang, president and CEO of Supermicro. “Our liquid-cooled AI solutions are being rapidly adopted by ambitious AI infrastructure projects globally, with over 2,000 liquid-cooled racks shipped since June 2024.” These cutting-edge SuperClusters boast advanced in-rack or in-row coolant distribution units (CDUs) alongside specialized cold plates designed for housing dual Nvidia GB200 Grace Blackwell Superchips in a compact 1U form factor.

As AI workloads multiply, liquid cooling looks set to take center stage in data center operations. Its role in managing heat and energy demands is becoming increasingly vital for the next generation of AI computing, and its implications for efficiency, scalability, and sustainability are only beginning to emerge.
