Unlocking Trustworthy AI: Microsoft’s New Initiatives for Security, Safety, and Privacy

As artificial intelligence takes on a larger role in daily life, the need for robust, trustworthy AI systems becomes ever clearer. Microsoft has made it a stated priority to ensure that its AI technologies are not only innovative but also secure, safe, and respectful of privacy.

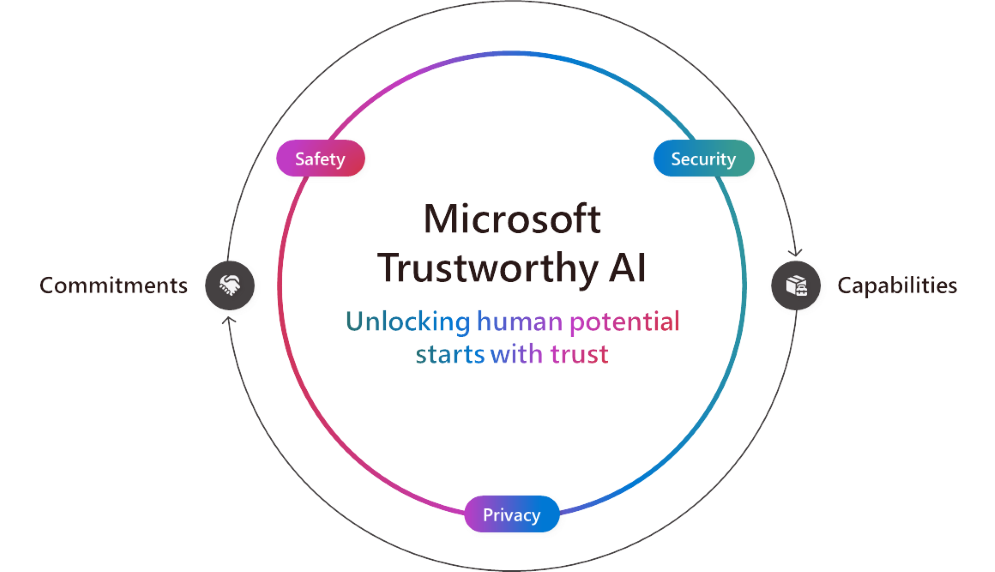

Trustworthy AI: A Collective Responsibility

Microsoft holds that building trustworthy AI is not solely the job of technology companies. It sees this as a collective responsibility shared by organizations and communities around the world. To that end, the company is expanding its Trustworthy AI commitments with new product capabilities focused on security, safety, and privacy.

Prioritizing Security with the Secure Future Initiative

At the core of Microsoft’s strategy is the Secure Future Initiative (SFI), which aims to strengthen security across the company. The recently published SFI Progress Report describes progress in four areas: culture, governance, technology, and operations. The initiative rests on three guiding principles: secure by design, secure by default, and secure operations.

Recently introduced features show how these principles reach users. They include evaluations in Azure AI Studio, which support proactive risk assessments, and greater transparency into the web queries that Microsoft 365 Copilot makes. Customers are already applying these tools. Cummins, an engine manufacturer, used Microsoft Purview to automate data classification and tagging, strengthening its data governance and security.

Ensuring AI Safety

In Microsoft’s framing, AI safety encompasses both security and privacy. The company’s Responsible AI principles, established in 2018, guide how it develops and deploys AI. They aim to prevent outcomes such as biased results or the spread of harmful content. Microsoft has also invested heavily in policies and tools that help organizations build and deploy AI responsibly.

New offerings include:

- Correction Capability: Part of the groundedness detection feature in Azure AI Content Safety, this capability helps detect and correct hallucinated (ungrounded) output in real time, before users see it.

- Embedded Content Safety: This feature lets customers run Azure AI Content Safety directly on devices. As a result, content filtering keeps working in on-device scenarios where cloud connectivity is intermittent or unavailable.

- Protected Material Detection for Code: Now in preview, this tool helps developers detect AI-generated code that matches pre-existing code in public GitHub repositories. This supports transparency and more informed coding decisions.

Companies such as Unity and ASOS are already using Microsoft’s AI safety tools to build AI applications that behave responsibly toward their users.

A Strong Commitment to Privacy

Data is the foundation of AI, which makes privacy a top priority for Microsoft. Its long-standing privacy principles emphasize user control, transparency, and legal and regulatory protections. The company recently announced new capabilities that are meant to help customers meet compliance requirements while still innovating with AI.

Notable privacy enhancements include:

- Confidential inferencing for the Whisper model in Azure OpenAI Service, which keeps sensitive customer data protected while it is processed during inference.

- General availability of Azure confidential VMs with NVIDIA H100 Tensor Core GPUs, which let customers protect data while it is being processed on high-performance GPU infrastructure.

- Azure OpenAI Data Zones for the US and EU, coming soon, which build on Azure OpenAI Service’s existing data residency options. They make it easier to control where generative AI applications process and store data.

Customers are already putting these privacy technologies to work. The Royal Bank of Canada, for example, uses Azure confidential computing to analyze encrypted data while preserving customer privacy.

Building Trustworthy AI Together

Demand for trustworthy AI spans many sectors, including education. New York City Public Schools has worked with Microsoft on safe use of AI in classrooms. Programs such as EdChat, from the South Australia Department for Education, bring generative AI into schools with safeguards in place for students.

Microsoft’s new capabilities address security, safety, and privacy together, with the aim of helping organizations adopt AI with confidence. This combined approach supports the company’s broader mission and a more sustainable future for AI technology.

Conclusion

As AI continues to evolve, Microsoft’s Trustworthy AI work offers a reference point for the industry. The company prioritizes security, safety, and privacy, and it gives customers across sectors the tools to act on those priorities. In doing so, Microsoft positions itself as a leader in responsible AI development.

The AI Buzz Hub team is excited to see where these breakthroughs take us. Want to stay in the loop on all things AI? Subscribe to our newsletter or share this article with your fellow enthusiasts.