Large Language Models in Production

Exploring Deployment Options for Enhanced Performance

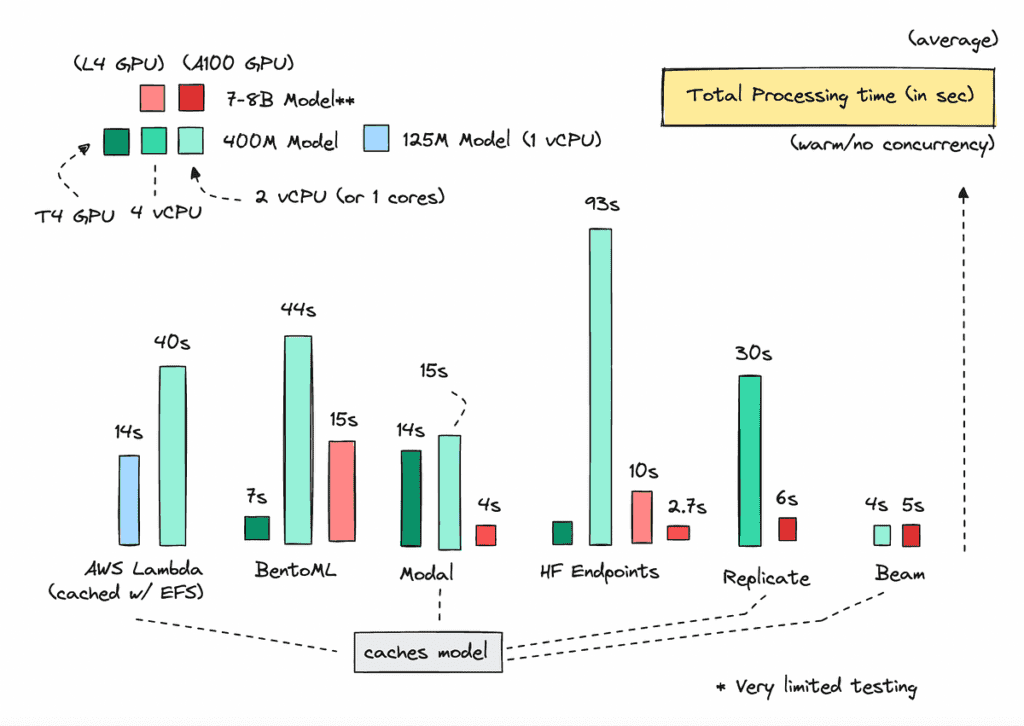

If you’ve been dabbling with open-source models, you might be wondering: what’s the smartest way to roll them out? With so many choices available, especially when it comes to cloud services, it’s crucial to weigh your options carefully. The differences can often come down to pricing, performance, and ease of use.

Evaluating Your Options

Now for the big question: should you go with an established giant like AWS, or consider newer platforms such as Modal, BentoML, Replicate, Hugging Face Inference Endpoints, and Beam? Each has its strengths and weaknesses, and understanding them can save you both time and money in the long run.

Key Metrics to Consider

When assessing these platforms, think about several important metrics:

- Processing Time: How quickly does the model return an inference result once it is up and running? This has a direct impact on user experience.

- Cold Start Delay: How long does the first request take while the platform spins up a container and loads the model? On serverless platforms this delay can dwarf the inference time itself.

- Cost Analysis: Compare CPU, memory, and GPU costs. You want a solution that fits your budget without compromising quality.

- Ease of Deployment: User-friendliness can’t be overlooked. A platform that is easy to set up lets you focus on development rather than troubleshooting.
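To make the first three metrics concrete, here is a minimal Python sketch of how you might measure them yourself. It is not tied to any particular platform: `measure_latency`, `cost_per_1k_requests`, and `make_fake_endpoint` are hypothetical helpers written for this illustration, and the fake endpoint only simulates a cold start with `time.sleep`. In practice you would pass in a function that sends a real request to your deployed model, and plug in the per-second price from your provider's pricing page.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency(call: Callable[[], object], warm_runs: int = 5) -> Dict[str, float]:
    """Time one first (possibly cold) call, then several warm calls."""
    start = time.perf_counter()
    call()  # first request: includes any cold start delay
    first = time.perf_counter() - start

    warm = []
    for _ in range(warm_runs):
        start = time.perf_counter()
        call()
        warm.append(time.perf_counter() - start)

    warm_median = statistics.median(warm)
    return {
        "first_call_s": first,
        "warm_median_s": warm_median,
        # Rough estimate: first call minus a typical warm call.
        "cold_start_estimate_s": max(0.0, first - warm_median),
    }


def cost_per_1k_requests(price_per_second: float, seconds_per_request: float) -> float:
    """Estimated compute cost of 1,000 requests billed per second of runtime."""
    return price_per_second * seconds_per_request * 1000


def make_fake_endpoint(cold_s: float = 0.05, warm_s: float = 0.01) -> Callable[[], str]:
    """Stand-in for a real endpoint (assumption): slow first call, fast afterwards."""
    state = {"warm": False}

    def call() -> str:
        if not state["warm"]:
            time.sleep(cold_s)  # simulated container and model load
            state["warm"] = True
        time.sleep(warm_s)  # simulated inference time
        return "ok"

    return call


if __name__ == "__main__":
    stats = measure_latency(make_fake_endpoint())
    print(stats)
    # The price below is a made-up placeholder, not a real quote.
    print(cost_per_1k_requests(price_per_second=0.001, seconds_per_request=stats["warm_median_s"]))
```

Running the same measurement against each candidate platform, with the same model and the same prompt, gives you numbers you can compare directly instead of relying on impressions.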

Real-world Insights

Let’s take a moment to consider a hypothetical scenario. Imagine you’ve created an AI model to help tourists navigate bustling local markets, and you settle on a serverless deployment. Initially, you opt for AWS, drawn by its brand recognition. However, after experiencing long cold start times, you decide to experiment with alternatives like Hugging Face. In this scenario, the switch brings an immediate improvement in processing time, much to the delight of your users.

A Personal Touch

In my experience, experimenting with various platforms often leads to surprising results. I started out with a simple model on AWS, only to find that a niche provider offered similar capabilities at a fraction of the cost, with better response times. This opened my eyes to the fast-evolving landscape of AI deployment options.

Summing It Up

As you deploy large language models, keep an eye not just on the metrics but also on the developer experience and community support surrounding these tools. Platforms with vibrant communities often provide quicker solutions to problems and valuable peer insights that can enhance your project.

In conclusion, while established platforms like AWS might seem tempting due to their familiarity, newer players might be just the breath of fresh air you need. The AI Buzz Hub team is excited to see where these breakthroughs take us.