Unraveling Misconceptions in Online Data Science Content for Better Learning

Navigating the vast and intricate world of data science can often feel overwhelming, particularly when misinformation runs rampant online. While working through various resources to clear up my doubts and learn new concepts, I came across a plethora of low-quality answers. Surprisingly, many of them received undue praise despite resting on a fundamentally flawed understanding. To help fellow learners sidestep these pitfalls, I’ve decided to start a series spotlighting common errors found in online content, including some mistakes I may have made myself.

In this initial installment, I will delve into four prevalent misconceptions about basic machine learning and statistics concepts, alongside counter-examples to debunk these errors.

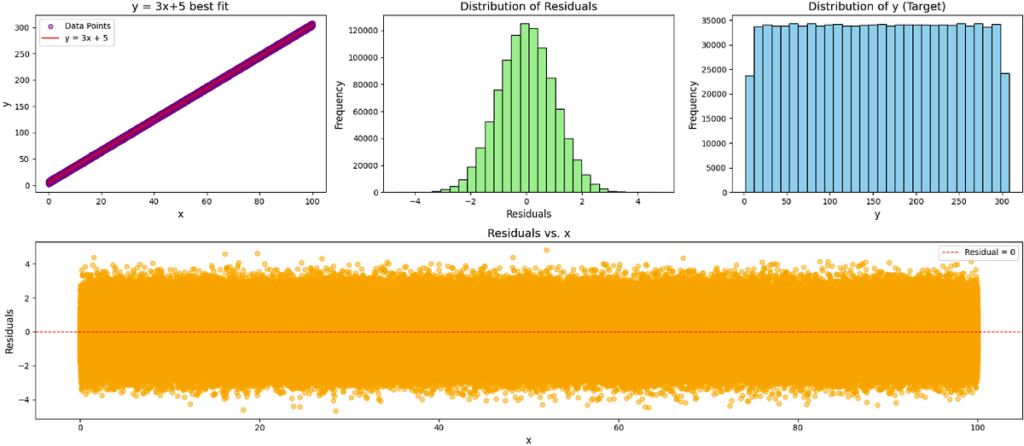

Misconception 1: The Assumption of Normality in Linear Regression

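One widespread claim is that "in Linear Regression (LR), one of the assumptions is the target Y conditional on X must be normally distributed." This statement is not wrong so much as easy to misread, so it’s worth clarifying. Because the model is Y = Xβ + ε, saying that Y given X is normally distributed is equivalent to saying that the errors ε are normally distributed (with mean zero and constant variance). What the assumption does not require is that the target Y, taken on its own, be normally distributed: Y can be heavily skewed or multimodal simply because X is. In practice, the assumption is checked on the residuals, the observable estimates of the errors, rather than on Y itself. Normality also matters less than is often implied: it underpins exact small-sample inference (t-tests, F-tests, confidence intervals), but the ordinary least squares estimates remain unbiased without it, given the other standard assumptions. This distinction is crucial for model evaluation and for ensuring accurate inference.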
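To see the distinction, here is a minimal simulation sketch in Python with NumPy. The data are entirely hypothetical: a right-skewed (exponential) predictor plus Gaussian noise, so Y given X is normal by construction while Y on its own is not. The `skewness` helper is written here for illustration; a skewness near zero is consistent with (though not proof of) a symmetric, normal-looking distribution.

```python
import numpy as np

def skewness(a) -> float:
    """Sample skewness: the third standardized moment (0 for a symmetric distribution)."""
    a = np.asarray(a, dtype=float)
    centered = a - a.mean()
    return float(np.mean(centered**3) / np.mean(centered**2) ** 1.5)

rng = np.random.default_rng(0)
n = 10_000

# Hypothetical data: a strongly right-skewed predictor plus Gaussian noise,
# so the errors (and hence Y | X) are normal by construction.
x = rng.exponential(scale=1.0, size=n)
y = 1.0 + 3.0 * x + rng.normal(loc=0.0, scale=1.0, size=n)

# Ordinary least squares with a design matrix of [1, x].
X = np.column_stack([np.ones(n), x])
beta, *_ = np.linalg.lstsq(X, y, rcond=None)
residuals = y - X @ beta

print(f"estimated intercept, slope: {beta.round(2)}")
print(f"skewness of Y:         {skewness(y):.2f}")          # far from 0: Y is not normal
print(f"skewness of residuals: {skewness(residuals):.2f}")  # close to 0
```

Y comes out strongly skewed while the residuals are roughly symmetric, so testing Y itself for normality would wrongly flag a model whose error assumption actually holds. For a fuller check, a Q-Q plot of the residuals is the usual tool.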

Misconception 2: All Machine Learning Models Require Large Datasets

A common belief is that "all machine learning models require large datasets." Many advanced models, such as deep neural networks, do thrive on massive datasets, but smaller datasets can still yield significant insights, especially when you use simpler models or techniques like transfer learning. How much data you need depends largely on how many parameters the model has to estimate and how noisy the data are. A basic linear regression model, for instance, estimates only a handful of coefficients, so a smaller, well-curated dataset can often suffice to make valid predictions without vast amounts of data.
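A minimal sketch of this point, assuming the true relationship really is linear (the `make_data` function and its coefficients are hypothetical): it fits a straight line on 20 points and on 2,000 points, then scores both on a large held-out set.

```python
import numpy as np

rng = np.random.default_rng(42)

def make_data(n):
    # Hypothetical ground truth: y = 2x + 1 plus Gaussian noise with sd 0.5,
    # so no model can beat a test MSE of about 0.5**2 = 0.25.
    x = rng.uniform(-3.0, 3.0, size=n)
    y = 2.0 * x + 1.0 + rng.normal(0.0, 0.5, size=n)
    return x, y

x_test, y_test = make_data(10_000)  # large held-out set used only for scoring

test_mse = {}
for n_train in (20, 2_000):
    x_tr, y_tr = make_data(n_train)
    slope, intercept = np.polyfit(x_tr, y_tr, deg=1)  # least-squares straight line
    pred = slope * x_test + intercept
    test_mse[n_train] = float(np.mean((y_test - pred) ** 2))
    print(f"n={n_train:>5}: slope={slope:.2f}, intercept={intercept:.2f}, "
          f"test MSE={test_mse[n_train]:.3f}")
```

Both test errors should land close to the noise floor of 0.25: with only two coefficients to estimate, the extra 1,980 points buy little. The picture changes for flexible models with many parameters, which is where large datasets earn their keep.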

Misconception 3: Overfitting Means You Can’t Use the Model

Another frequent misunderstanding is that "if a model is overfitting, it is entirely unusable." Overfitting, where a model learns noise in the training data rather than the underlying pattern, does undermine a model’s predictive power on unseen data, but it is a matter of degree rather than a verdict that the model is useless. Cross-validation helps you detect and measure overfitting by estimating performance on held-out data, while regularization (for models such as linear regression and neural networks) and pruning (for decision trees) reduce it, allowing for a more robust application of the model.
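As a minimal sketch of both halves of this point, the NumPy code below deliberately overfits a degree-14 polynomial to 15 noisy points and then refits the same model with an L2 (ridge) penalty. The data, the degree, and the penalty strength `lam=1e-3` are illustrative assumptions; in practice you would choose `lam` by cross-validation rather than by hand.

```python
import numpy as np

rng = np.random.default_rng(7)

def true_fn(x):
    return np.sin(np.pi * x)

def poly_features(x, degree):
    # Columns x**0, x**1, ..., x**degree (a Vandermonde matrix).
    return np.vander(x, N=degree + 1, increasing=True)

def fit(X, y, lam=0.0):
    """Least squares; lam > 0 adds an L2 (ridge) penalty on every coefficient."""
    if lam <= 0.0:
        beta, *_ = np.linalg.lstsq(X, y, rcond=None)
        return beta
    p = X.shape[1]
    # Ridge closed form: (X^T X + lam * I)^-1 X^T y (intercept penalized too, for brevity).
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

def mse(X, y, beta):
    return float(np.mean((y - X @ beta) ** 2))

degree = 14  # 15 coefficients for 15 points: enough to interpolate the noise exactly
x_train = np.linspace(-1.0, 1.0, 15)
y_train = true_fn(x_train) + rng.normal(0.0, 0.3, size=x_train.size)
x_test = rng.uniform(-1.0, 1.0, size=1_000)
y_test = true_fn(x_test) + rng.normal(0.0, 0.3, size=x_test.size)

X_train = poly_features(x_train, degree)
X_test = poly_features(x_test, degree)

beta_ols = fit(X_train, y_train)              # overfits
beta_ridge = fit(X_train, y_train, lam=1e-3)  # same model class, regularized

for name, beta in [("unregularized", beta_ols), ("ridge", beta_ridge)]:
    print(f"{name:>13}: train MSE={mse(X_train, y_train, beta):.3f}, "
          f"test MSE={mse(X_test, y_test, beta):.3f}")
```

The unregularized fit drives training error to essentially zero by threading through the noise, and its test error reflects that; the ridge fit gives up a little training accuracy for a much smoother curve that should generalize better. The overfit model was not a dead end: the same model class became usable once regularized.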

Misconception 4: Correlation Implies Causation

Lastly, many believe that correlation implies causation. The well-known adage "correlation does not imply causation" exists precisely because this leap so often leads to significant misunderstandings in data interpretation. Two variables may be correlated without one causing the other: the link may come from a third factor (a confounder), from reverse causation, or from chance. For instance, a city may see both ice cream sales and drowning incidents rise during summer, but it would be erroneous to conclude that ice cream causes drownings; hot weather drives both, sending people toward ice cream and toward swimming. Understanding the difference between correlation and causation is imperative for drawing appropriate conclusions from data.
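A minimal simulation sketch of the confounding story, with entirely hypothetical numbers: daily temperature drives both ice cream sales and a continuous toy measure of drowning incidents, and neither affects the other. The code computes the raw correlation and then a partial correlation that removes the linear effect of temperature from both variables (the `residualize` helper is written here for illustration).

```python
import numpy as np

rng = np.random.default_rng(1)
n = 1_000

# Hypothetical daily data: temperature drives both quantities;
# neither one has any effect on the other.
temperature = rng.normal(25.0, 5.0, size=n)
ice_cream_sales = 50.0 + 10.0 * temperature + rng.normal(0.0, 20.0, size=n)
drownings = 0.5 + 0.2 * temperature + rng.normal(0.0, 0.5, size=n)  # toy continuous measure

raw_r = np.corrcoef(ice_cream_sales, drownings)[0, 1]

def residualize(v, z):
    # Remove the part of v explained linearly by z (least squares on [1, z]).
    Z = np.column_stack([np.ones_like(z), z])
    coef, *_ = np.linalg.lstsq(Z, v, rcond=None)
    return v - Z @ coef

# Partial correlation: correlate what is left after accounting for temperature.
partial_r = np.corrcoef(residualize(ice_cream_sales, temperature),
                        residualize(drownings, temperature))[0, 1]

print(f"raw correlation:              {raw_r:.2f}")
print(f"controlling for temperature:  {partial_r:.2f}")
```

The raw correlation comes out strong, roughly 0.8, while the partial correlation is close to zero once temperature is accounted for. Real data rarely hand you the confounder this neatly, which is why causal claims usually need a controlled experiment or an explicit causal model rather than a correlation coefficient.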

Conclusion

As we explore the complexities of data science, debunking misconceptions is vital for effective learning. The journey towards mastering these concepts is filled with twists and turns, but by staying informed and vigilant, we can enhance our understanding and navigate through potential pitfalls.

The AI Buzz Hub team is excited to see where these breakthroughs take us.