DeepSeek’s Data Breach: A Wake-Up Call for AI Security

By Ravie Lakshmanan | January 30, 2025

In the fast-paced world of artificial intelligence, the buzz around innovative startups often comes with a shadow of risk. The recent case of DeepSeek, a rapidly growing Chinese AI company, has brought this concern to the forefront. Just days ago, the company was found to have left a sensitive database exposed on the internet, potentially giving malicious actors access to a trove of confidential information.

What’s in the Database?

The exposed ClickHouse database, which was reachable at more than one address, posed significant risks. According to Wiz security researcher Gal Nagli, it allowed full control over database operations and exposed more than a million lines of log streams, including chat histories, secret keys, backend details, and critical operational data such as API secrets. Thankfully, DeepSeek moved quickly to secure the database after security researchers alerted it.

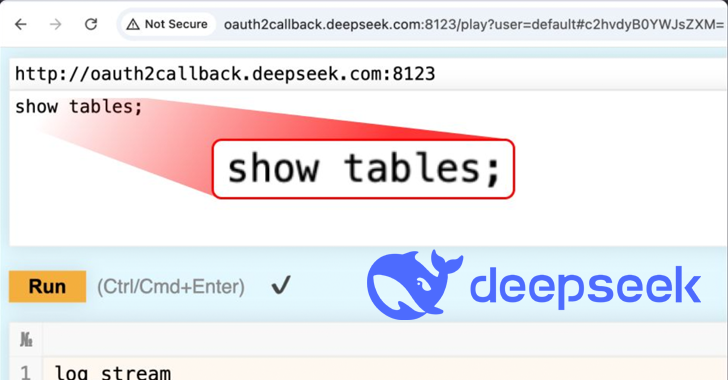

No Authentication, No Problem

The severity of the exposure lies in how easily it could be exploited. The database was reachable through ClickHouse’s HTTP interface, which let anyone run arbitrary SQL queries straight from a web browser without any form of authentication. This raises a chilling question: did any bad actors take advantage of the oversight? So far, there is no clear evidence of data theft, but it remains unclear whether anyone else accessed or downloaded the data.
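For teams that run ClickHouse themselves, the failure mode described above is easy to test for. The following is a minimal sketch in Python using only the standard library, assuming the server listens on ClickHouse’s default HTTP port, 8123; the helper names are hypothetical and not part of any ClickHouse tooling. It sends a harmless `SELECT 1` with no credentials and reports whether the server answered. Run it only against infrastructure you operate.

```python
import urllib.error
import urllib.parse
import urllib.request


def build_probe_url(host: str, port: int = 8123) -> str:
    """Build a ClickHouse HTTP-interface URL that runs a harmless query.

    No user or password is attached, so the server falls back to its
    default user. Port 8123 is ClickHouse's default HTTP port; adjust it
    if your deployment differs (assumption).
    """
    query = urllib.parse.urlencode({"query": "SELECT 1"}, quote_via=urllib.parse.quote)
    return f"http://{host}:{port}/?{query}"


def looks_unauthenticated(status: int, body: str) -> bool:
    """True if the server executed the query and returned its result."""
    return status == 200 and body.strip() == "1"


def check_own_server(host: str, port: int = 8123, timeout: float = 5.0) -> bool:
    """Probe a server YOU operate; True means it ran SQL without credentials."""
    url = build_probe_url(host, port)
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return looks_unauthenticated(resp.status, resp.read().decode())
    except urllib.error.HTTPError:
        # Any error status (e.g. an authentication failure) means the query did not run.
        return False
    except OSError:
        # Connection refused, DNS failure, or timeout: endpoint not reachable.
        return False
```

A `True` result from `check_own_server("db.internal.example")` would suggest that anyone who can reach that port can run SQL as ClickHouse’s default user; requiring a password for that user and keeping the port off the public internet closes the gap.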

“Rapid adoption of AI services without corresponding security is inherently risky,” Nagli said. He stressed the need for closer collaboration between security teams and AI engineers to safeguard sensitive data. Although futuristic AI threats receive the lion’s share of attention, basic but critical risks, such as unsecured databases, may pose more immediate dangers.

The Chaotic Rise of DeepSeek

DeepSeek has drawn attention not only for its groundbreaking open-source models, whose release some have called “AI’s Sputnik moment,” but also for its controversial practices. As its AI chatbot climbed to the top of app store rankings in multiple regions, the startup became the target of large-scale malicious attacks. Overwhelmed by the activity, the company temporarily paused new registrations to shore up its defenses.

In a recent update, DeepSeek told users it was aware of the data exposure issue and was actively working on a fix. Beyond the immediate incident, however, its privacy policies, and particularly its ties to China, have drawn scrutiny in the U.S., where the company is being discussed as a potential national security concern.

Regulatory Scrutiny and International Fallout

In Europe, the situation has escalated. Italy’s data protection regulator, the Garante, recently asked DeepSeek for information about its data handling practices, and the company’s apps soon became unavailable in the country. Ireland’s Data Protection Commission (DPC) has since opened its own inquiry.

Adding to the scrutiny, major tech players OpenAI and Microsoft are examining whether DeepSeek may have improperly used OpenAI’s API to train its own models through “distillation,” a technique in which one model is trained on the outputs of another. The question is especially sensitive because various groups in China are reportedly working to replicate advanced U.S. AI models.

The Path Forward

As the AI landscape grows more complex, it’s clear that established companies and newcomers alike must prioritize security alongside innovation. Protecting customer data isn’t just a legal obligation; it’s integral to maintaining trust in these powerful technologies. The incident with DeepSeek stands as a stark reminder that in the world of AI, even the smallest oversight can have substantial repercussions.

The AI Buzz Hub team is excited to see where these breakthroughs take us.