Unlock Your Potential with Retrieval-Augmented Generation in AI

Advances in Large Language Models (LLMs) have generated excitement worldwide. Since OpenAI launched ChatGPT in November 2022, terms like Generative AI have entered everyday conversation. In a short time, LLMs have proven useful for a wide range of language processing tasks and have enabled the development of autonomous AI agents. Many see this as a transformative moment in technology, comparable to the arrival of the internet or the light bulb. As a result, business leaders, software developers, and entrepreneurs are eager to harness the power of LLMs.

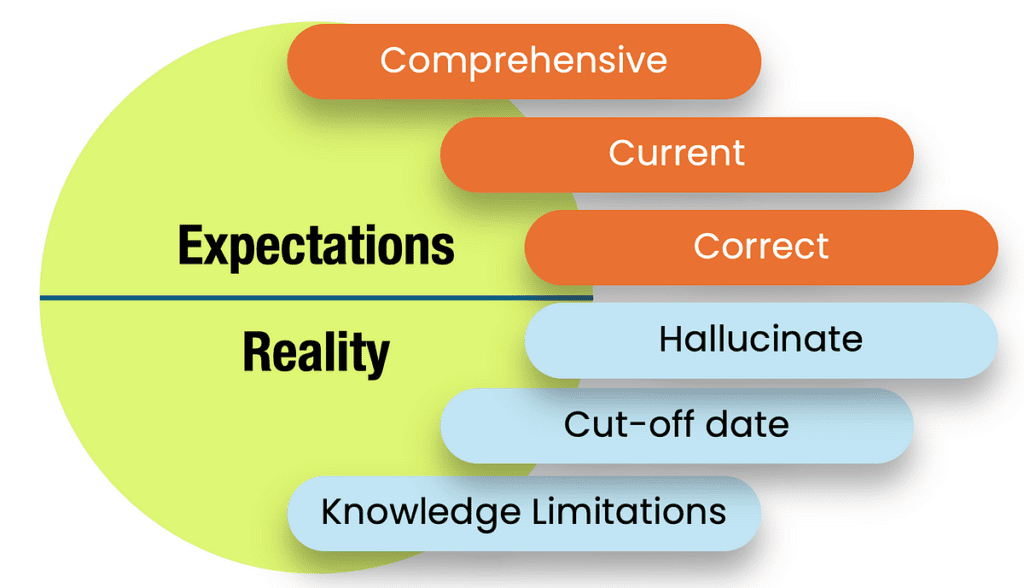

This is where Retrieval-Augmented Generation, or RAG, comes into play. First introduced by Lewis et al. in their paper “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks,” RAG has quickly become a key technique in applied generative AI for improving the reliability and trustworthiness of the output produced by Large Language Models.

In this article, we’re diving deep into the evaluation of RAG systems, but first, let’s explore why RAG is essential and how we can set up effective RAG pipelines.

Have you ever found yourself caught in the web of information overload, unsure of which sources to trust? Picture this: you’re searching for reliable advice on starting a small business or finding the best hiking trails around Enchanted Rock in Texas. The sheer volume of information can be overwhelming! This is where Retrieval-Augmented Generation shines. It pairs the fluent text generation of Large Language Models with a retrieval step that fetches relevant passages from trusted sources and passes them to the model as context, so the answers are not just coherent but also grounded in dependable data.
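To make the retrieve-then-generate idea concrete, here is a minimal sketch in Python. It is an illustration under simplifying assumptions, not a production pipeline: the `DOCUMENTS` list holds placeholder text, the word-overlap scoring stands in for a real vector search, and the function names (`retrieve`, `build_prompt`) are hypothetical. The final call to an LLM is left out; the sketch stops at the prompt you would send.

```python
import re
from collections import Counter

# Hypothetical mini knowledge base with placeholder text.
# A real system would query a document store or vector index instead.
DOCUMENTS = [
    "Placeholder trail guide: hiking trails around Enchanted Rock.",
    "Placeholder checklist: steps for starting a small business.",
    "Placeholder recipe: how to bake sourdough bread.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query, document):
    """Count the word tokens shared by the query and the document."""
    query_counts = Counter(tokenize(query))
    doc_counts = Counter(tokenize(document))
    return sum((query_counts & doc_counts).values())


def retrieve(query, documents, k=2):
    """Return up to k documents with the highest non-zero overlap score."""
    scored = [(overlap_score(query, doc), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query, passages):
    """Assemble the augmented prompt: retrieved context first, then the question."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


question = "best hiking trails around Enchanted Rock"
passages = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, passages)
print(prompt)
```

The key design point is that the model never has to answer from memory alone: whatever it generates is conditioned on passages you selected and can audit.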

Here are some key metrics and techniques to keep in mind when improving the performance of your RAG system:

- Data Reliability: Ensure the databases you’re pulling data from are up to date and credible. This is essential in sectors like healthcare or finance.

- Contextual Relevance: Tune your retrieval method so that the retrieved passages, and therefore the generated responses, match the specific context of the query.

- User Feedback: Engage your users in the feedback loop. Their insights can help refine the model, making it more user-oriented.

- Continuous Testing: Test and troubleshoot the system regularly to catch potential pitfalls early and keep it robust.
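The four points above can each be turned into a simple, measurable check. The sketch below is one possible way to do that in Python, under stated assumptions: the function names, the 365-day freshness window, the thumbs-up/thumbs-down feedback format, and the 0.5 threshold are all hypothetical choices for illustration, and precision@k is used here as one common stand-in for contextual relevance.

```python
from datetime import date


def is_fresh(last_updated, today, max_age_days=365):
    """Data reliability: True if the source was updated within max_age_days."""
    return (today - last_updated).days <= max_age_days


def precision_at_k(retrieved_ids, relevant_ids, k):
    """Contextual relevance: fraction of the top-k retrieved items that are relevant.

    If fewer than k items were retrieved, the fraction is taken over those items.
    """
    top_k = retrieved_ids[:k]
    if not top_k:
        return 0.0
    hits = sum(1 for doc_id in top_k if doc_id in relevant_ids)
    return hits / len(top_k)


def helpful_rate(feedback):
    """User feedback: share of thumbs-up votes (True) among all votes."""
    if not feedback:
        return 0.0
    return sum(feedback) / len(feedback)


def regression_check(test_cases, retrieve_fn, k=3, threshold=0.5):
    """Continuous testing: return the queries whose precision@k is below threshold."""
    failures = []
    for query, relevant_ids in test_cases:
        score = precision_at_k(retrieve_fn(query), relevant_ids, k)
        if score < threshold:
            failures.append(query)
    return failures


# Example usage with made-up IDs and a stub retriever (illustrative only).
def stub_retriever(query):
    canned = {"trails": ["d1", "d2", "d3"], "business": ["d9", "d8", "d7"]}
    return canned.get(query, [])


cases = [("trails", {"d1", "d3"}), ("business", {"d4"})]
print(regression_check(cases, stub_retriever))
print(helpful_rate([True, True, False, True]))
print(is_fresh(date(2024, 1, 1), today=date(2024, 6, 1)))
```

Running checks like these on a fixed set of test queries after every change to the retriever or the data gives you an early warning when quality drops.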

As AI adoption grows, RAG is proving to be a valuable ally. Think about how we’ve adapted to working from home or learning online: both depend on finding trustworthy information quickly, and reliable retrieval will only become more important as these settings keep evolving.

Let’s not forget that this isn’t just about technology; it’s about people. The real heroes are the innovators and users adapting these systems to solve real-world challenges. Whether it’s improving customer service for local shops or streamlining processes for nonprofits in our communities, the applications are endless.

In conclusion, incorporating Retrieval-Augmented Generation into your processes not only enhances the performance of LLMs but also fosters trust in AI-generated content. So why wait? Begin exploring RAG techniques today!
