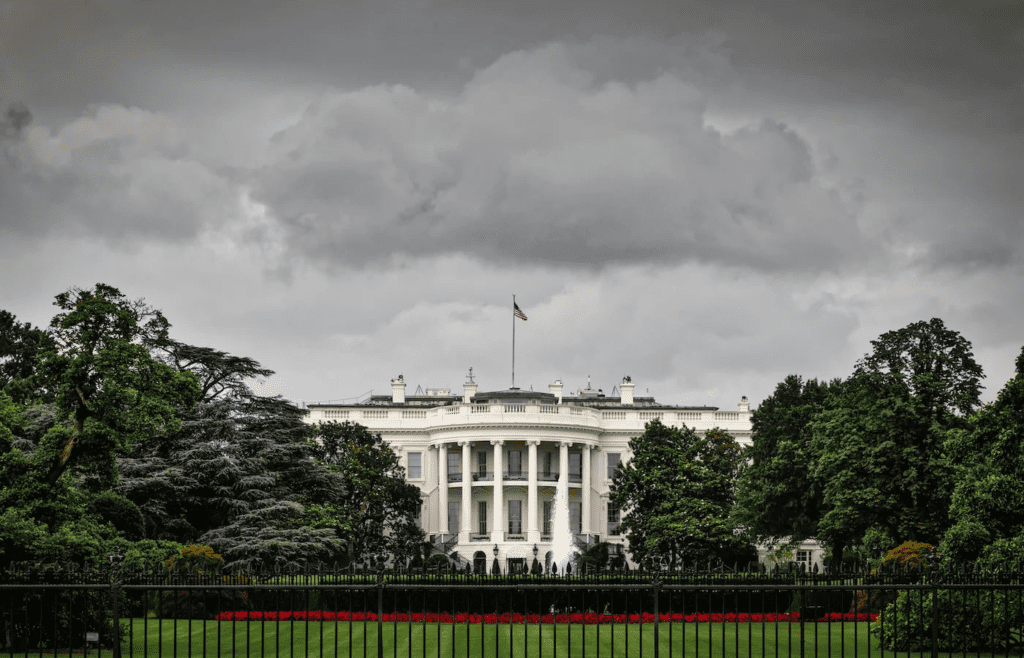

As artificial intelligence advances rapidly, Anthropic is calling on the White House to prioritize AI security and its far-reaching economic consequences. The company warns that without robust safeguards and monitoring, the U.S. risks falling behind in global AI competition and leaving national security vulnerabilities unaddressed.

With AI poised to transform many industries, the stakes are high. Anthropic is urging the U.S. government to take decisive action to maintain the country's technological leadership and to address the risks that come with advanced AI.

Anthropic’s AI Security Demands for the White House

In a recent communication with the White House Office of Science and Technology Policy (OSTP), Anthropic advocated for comprehensive testing protocols that evaluate AI systems for biosecurity and cybersecurity threats before they are released. The company's internal assessments raised particular concern about Claude 3.7 Sonnet's potential to unintentionally assist with biological weapons development.

Anthropic expects that powerful AI systems, with capabilities comparable to or exceeding those of Nobel Prize winners, could arrive as soon as 2026, which it argues makes prompt action essential. The company also warned of significant energy challenges: training a single advanced AI model could require around five gigawatts of power by 2027. To meet this demand, Anthropic is urging the administration to set an ambitious target of adding 50 gigawatts of power dedicated to the AI industry over the next three years.

Anthropic cautions that ignoring these energy requirements could push U.S. AI developers to move their operations abroad, shifting the center of America's AI economy to foreign competitors.

Classified Communication: A Proposed Security Framework

In response to ongoing national security challenges, Anthropic CEO Dario Amodei has recommended the establishment of classified communication channels between the U.S. government, AI companies, and intelligence agencies. He emphasizes the necessity of sharing security information to prevent the misuse of powerful AI systems, which could otherwise be co-opted for cyberattacks or used to control essential physical systems like laboratory equipment and manufacturing tools.

Implications for Organizations

As Anthropic pushes for tighter AI security measures, organizations should take these risks seriously. The growing possibility that adversaries gain access to high-performance AI systems is a reason for businesses to strengthen their defenses now. By preparing for stricter security requirements in advance, companies can avoid costly disruptions and better protect their infrastructure.

In summary, Anthropic presents AI security as an urgent priority. As AI capabilities grow alongside their risks, the actions taken today will shape the AI landscape for years to come. The AI Buzz Hub team is excited to see where these developments lead.