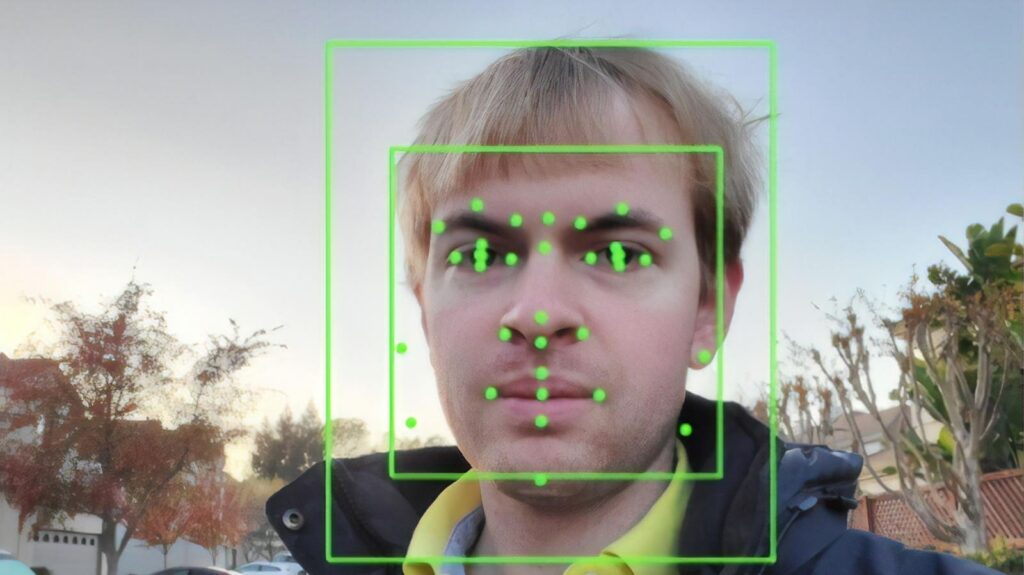

Output of a facial recognition system.

Getty Images

In a bold move to extend its surveillance reach, facial recognition company Clearview AI sought to acquire nearly 690 million arrest records to enrich its controversial database, according to documents analyzed by 404 Media. The data it sought included sensitive personal information such as Social Security numbers and mugshots, raising significant privacy concerns.

Already infamous for harvesting over 50 billion facial images from social media, Clearview signed a contract in mid-2019 with Investigative Consultant, Inc. (ICI) to obtain the records. “The contract indicates that Clearview aimed to collect social security numbers, email addresses, home addresses, and more,” pointed out Jeramie Scott, Senior Counsel at the Electronic Privacy Information Center (EPIC).

However, the ambitious plan hit a roadblock and devolved into a legal dispute: after paying $750,000 for an initial delivery of data, Clearview claimed that the data was “unusable.” Although an arbitrator ruled in Clearview’s favor in December 2023, the company has struggled to recover its money and is now seeking a court order to enforce the arbitration award.

Facial Recognition: The Ethical Dilemma

Privacy advocates express serious concerns about the implications of combining facial recognition technology with law enforcement records. Scott stresses that linking individuals to mugshots can exacerbate bias when humans review the matches, particularly because people of color are disproportionately represented in the criminal justice system. Research has shown that facial recognition systems misidentify individuals with darker skin tones at higher rates. Such errors can have dire consequences, including wrongful arrests based on unreliable matches.

As a digital forensics expert, I’ve personally witnessed the pitfalls of facial recognition. In one case, authorities accused a defendant of a felony based solely on a facial recognition match from surveillance footage. My investigation in that case uncovered evidence proving the defendant’s innocence: cell phone data indicated he was miles away from the crime scene at the time of the incident. The experience was traumatic for the defendant, and it highlighted an alarming reliance on flawed technology, with investigators too quick to accept the facial recognition result without corroborating evidence.

These instances show the potential hazards of excessive trust in surveillance tools within our justice system. Clearview’s ambition to acquire larger databases only raises the stakes, risking further harm to innocent individuals.

Clearview’s Legal Woes

Clearview AI faces a growing series of legal challenges worldwide. Recently, the company fended off a £7.5 million fine from the UK’s Information Commissioner’s Office by successfully arguing that it was outside UK jurisdiction. Despite this victory, Clearview remains embroiled in broader regulatory battles, with several international regulators imposing hefty fines for privacy violations. The firm has also approved a settlement under which it would relinquish nearly a quarter of its ownership over alleged violations of biometric privacy laws.

Understanding Clearview’s Business Model

Clearview AI occupies a distinct position in the facial recognition landscape, selling access to its technology primarily to law enforcement agencies. It claims to have helped solve a variety of serious crimes, including homicides and fraud. Unlike conventional competitors such as NEC and Idemia, Clearview has drawn heightened scrutiny for its aggressive practice of harvesting images from social media without users’ consent.

The revelations about Clearview’s intentions come amid increasing pressure for tighter regulation of the facial recognition industry and greater transparency from it. As this powerful technology expands its influence in the law enforcement and security sectors, fundamental questions about privacy, consent, and algorithmic bias are becoming increasingly urgent in public discussion.

Just a note: The cases cited here are based on real events, but specific details, such as names and dates, have been altered to ensure client confidentiality while preserving vital legal and investigative aspects.

404 Media reached out to both ICI and Clearview for comment but received no reply. I have also sought comment from both and will update this article should I receive a response.
