Cloudera Launches AI Inference to Revolutionize Generative AI

Cloudera has hit the ground running with the launch of Cloudera AI Inference, a cutting-edge solution powered by NVIDIA NIM microservices, which are part of the NVIDIA AI Enterprise platform. This new service is set to transform the way enterprises deploy and manage large-scale AI models, paving the way for generative AI (GenAI) to move from pilot projects to full-blown production.

A Safe Haven for Sensitive Data

One of the standout features of Cloudera AI Inference is its robust approach to data privacy. Businesses often face the daunting task of protecting sensitive information from leaking to non-secure, vendor-hosted AI model services. Cloudera’s answer is a secure development and deployment framework that remains entirely within the control of the enterprise. By harnessing NVIDIA’s advanced technology, organizations can build trusted data systems that fuel trusted AI. This means enterprises can create powerful AI-driven chatbots, virtual assistants, and applications that are set to significantly enhance productivity and unlock new business growth opportunities.

Speed and Efficiency at Scale

What makes this service even more compelling is the opportunity for developers to build, customize, and deploy enterprise-grade large language models (LLMs) with astounding speed. Imagine achieving up to 36 times faster performance using NVIDIA Tensor Core GPUs, along with nearly four times the throughput, compared to traditional CPU setups. Cloudera has integrated user-friendly interfaces and APIs directly with NVIDIA NIM microservices, eliminating the often-frustrating need for command-line interfaces (CLI) and separate monitoring systems.

Key Features:

- Advanced AI Capabilities: Optimize open-source LLMs like Llama and Mistral for the latest advancements in natural language processing (NLP) and computer vision.

- Hybrid Cloud & Privacy: Execute workloads on-premises or in the cloud, featuring Virtual Private Cloud (VPC) deployments for additional security and compliance.

- Scalability & Monitoring: Benefit from auto-scaling, high availability, and real-time performance monitoring to quickly detect and rectify issues.

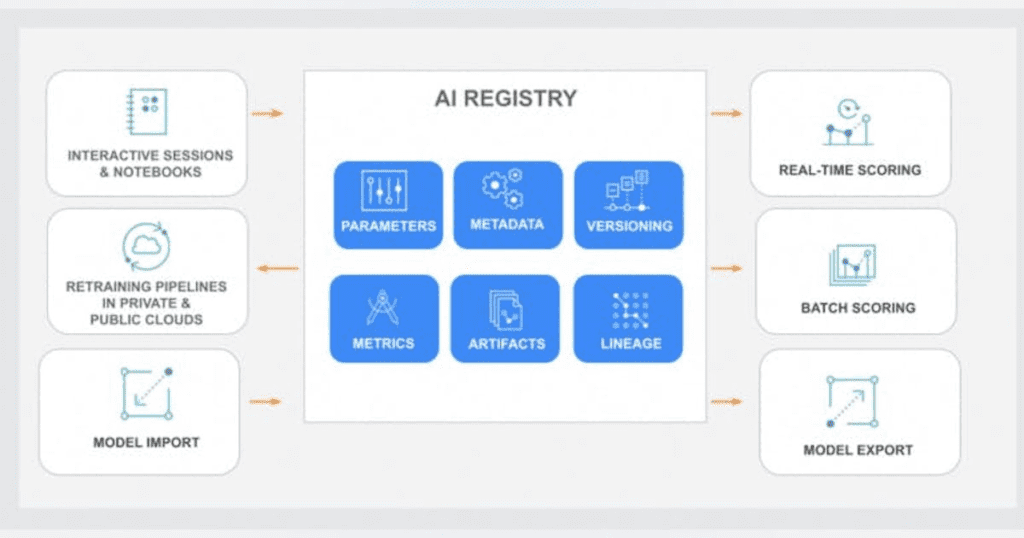

- Open APIs & CI/CD Integration: Utilize standards-compliant APIs for smooth model deployment and management, integrating seamlessly with CI/CD pipelines and MLOps workflows.

- Enterprise Security: Control model access through robust service accounts, access management, lineage tracking, and auditing features.

- Risk-Managed Deployment: Perform A/B testing and controlled model rollouts to manage updates safely.
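
To make the idea of a controlled rollout concrete, here is a minimal, generic sketch of weighted traffic splitting between two model versions. It is not Cloudera's implementation or API: the function name `pick_variant`, the variant labels, and the 90/10 split are all hypothetical, chosen only to illustrate how an A/B test can send a small, stable share of requests to a new model.

```python
import hashlib


def pick_variant(request_id: str, weights: dict[str, float]) -> str:
    """Deterministically map a request ID to a model variant by weight.

    Hypothetical helper for illustration only; not part of any Cloudera
    or NVIDIA API.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")

    # Hash the ID to a stable point in [0, 1). Using 48 bits keeps the
    # value exactly representable as a float and strictly below 1.
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    point = int.from_bytes(digest[:6], "big") / 2**48

    # Walk the cumulative weight ranges until the point falls inside one.
    cumulative = 0.0
    for name, weight in weights.items():
        cumulative += weight / total
        if point < cumulative:
            return name
    return name  # guard against floating-point rounding at the upper edge


# Assumed example split: 90% of traffic stays on the current model.
split = {"current-model": 0.9, "candidate-model": 0.1}
print(pick_variant("user-1234", split))
```

Because the choice is derived from a hash of the request ID rather than a random draw, the same ID always lands on the same variant, which keeps a user's experience consistent while the candidate model is being evaluated.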

Bridging the Gap

“Enterprises are eager to invest in GenAI, but it requires not only scalable data but also secure, compliant, and well-governed data,” says industry expert Sanjeev Mohan. He adds that running AI privately in production introduces complexity that traditional do-it-yourself (DIY) approaches handle poorly. Cloudera AI Inference steps in to fill this void by blending advanced data management with NVIDIA’s AI capabilities, so enterprises can unlock the full potential of their data while keeping it safe.

A Game-Changing Collaboration

Dipto Chakravarty, Cloudera’s Chief Product Officer, expressed excitement over the collaboration with NVIDIA, emphasizing that Cloudera AI Inference offers a unified landscape for numerous AI and ML applications. This innovative platform allows businesses to create impactful AI applications and operate them seamlessly within Cloudera’s environment.

“With the integration of NVIDIA AI, Cloudera is committed to empowering smarter decision-making and building trusted AI apps with reliable data at scale,” he stated.

Kari Briski, NVIDIA’s vice president of AI software, echoed this sentiment, emphasizing the need for enterprises to seamlessly weave generative AI into their existing data frameworks to achieve tangible business outcomes.

Looking Ahead

These groundbreaking features will be unveiled at Cloudera’s upcoming AI and data conference, Cloudera EVOLVE NY, on October 10.

As generative AI continues to evolve, Cloudera AI Inference stands as a testament to the potential of marrying innovative technology with enterprise needs. The AI Buzz Hub team is excited to see where these breakthroughs take us.