Common Mistakes in Machine Learning: A Deep Dive

Welcome back to our ongoing series exploring the hidden pitfalls in machine learning workflows! If you’ve been following along, you know we’re focusing on those sneaky errors that don’t always catch our eye but can seriously impact the performance of our models.

In our first installment, we tackled some common missteps, such as the misuse of numerical identifiers, mishandling data splits, and the dreaded overfitting to rare feature values. Today, we’re diving deeper into two critical topics that could make or break your machine learning efforts: training on data that will not be available at prediction time, and mixing magic numbers with real numbers.

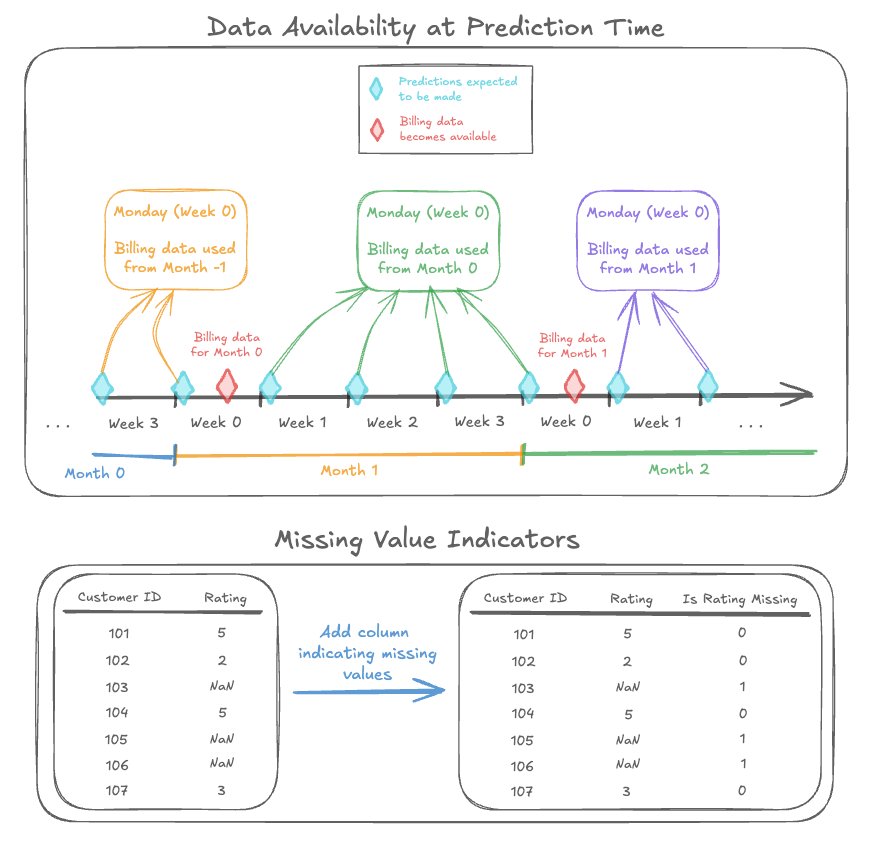

The Pitfall of Unavailable Data

Imagine you’ve built a model that’s performing well during training and validation phases, only to find it faltering in real-life applications. One major reason could be that during your training phase, you used data that won’t actually be present when the model is deployed.

For instance, consider a weather prediction model trained on historical records that include a rare event like a solar eclipse. That information might help the model fit its training set, but in deployment the model will rarely encounter an eclipse, and the live data feed may not report one at all. By including such data, you could inadvertently teach your model to rely on inputs that are sporadic or, worse, completely unavailable when it needs to make a prediction.

The best practice here? Stick to the data that your model will realistically receive in the field. This might mean dropping features that look useful during training, but your validation results will then be a much better guide to how the model behaves once deployed.
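One way to act on this is to compare the list of features used in training against the list the deployed system can actually supply. The snippet below is a minimal sketch of that check, not a definitive implementation; the function names and the feature names (including `eclipse_flag`) are hypothetical and chosen only to mirror the weather example.

```python
def find_unavailable_features(training_features, serving_features):
    """Return training features that are missing at prediction time, sorted by name."""
    return sorted(set(training_features) - set(serving_features))


def restrict_to_available(rows, serving_features):
    """Keep only the columns the deployed model will actually receive."""
    allowed = set(serving_features)
    return [{name: value for name, value in row.items() if name in allowed}
            for row in rows]


# Hypothetical feature lists, for illustration only.
training_features = ["temperature", "humidity", "pressure", "eclipse_flag"]
serving_features = ["temperature", "humidity", "pressure"]

missing = find_unavailable_features(training_features, serving_features)
print(missing)  # ['eclipse_flag']

# Drop the unavailable column before fitting the model.
training_rows = [
    {"temperature": 21.5, "humidity": 0.40, "pressure": 1012.0, "eclipse_flag": 0},
    {"temperature": 18.0, "humidity": 0.55, "pressure": 1008.0, "eclipse_flag": 1},
]
clean_rows = restrict_to_available(training_rows, serving_features)
```

Running a check like this before training makes the mismatch visible early, rather than after the model has already learned to depend on the missing input.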

Mixing Magic Numbers with Real Numbers

Next on our agenda is the integration of magic numbers into our datasets. You might be wondering what a “magic number” is. In the world of machine learning, this refers to arbitrary values that developers insert into code or models without giving much thought to their impact. For example, if you’re normalizing data and you choose a scaling factor of 100 for one feature but 1,000 for another, without being able to say why those numbers were selected, you’re mixing magic and real numbers.

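Why is this dangerous? Unexplained constants can put features on inconsistent scales and cause unexpected behavior in your model’s predictions, and nobody can tell later whether the value was deliberate. Instead of relying on a mysterious magic number, derive the value from the data itself, for example by computing each feature’s mean and standard deviation on the training set, and record how it was chosen. A little mathematical insight goes a long way in ensuring that your model is sound.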
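As an illustration of replacing a hand-picked scaling factor with a value derived from the data, here is a small sketch of standardization using only Python’s standard library. The function names `fit_scaler` and `transform` and the sample income values are assumptions made for this example; standardization is one common choice, not the only valid one.

```python
from statistics import mean, pstdev


def fit_scaler(values):
    """Learn centering and scaling parameters from training values only."""
    mu = mean(values)
    sigma = pstdev(values)
    # Guard against a constant feature, which would otherwise divide by zero.
    if sigma == 0:
        sigma = 1.0
    return mu, sigma


def transform(values, mu, sigma):
    """Apply previously learned parameters to any data, including new data."""
    return [(v - mu) / sigma for v in values]


# Hypothetical training values for a single feature.
train_income = [30_000.0, 45_000.0, 60_000.0, 75_000.0]
mu, sigma = fit_scaler(train_income)
scaled_train = transform(train_income, mu, sigma)

# At prediction time, reuse the same mu and sigma instead of refitting.
scaled_new = transform([52_500.0], mu, sigma)
```

Because the parameters come from the training data, every feature is scaled by the same documented rule, and the same values can be reused unchanged when the model is deployed.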

Improve Your Machine Learning Game

By steering clear of these common mistakes, you can significantly enhance the robustness and reliability of your machine learning models. Here are some quick tips to implement right away:

- Validate Your Data: Always ensure the data you’re training on reflects the conditions the model will face once it’s deployed.

- Avoid Arbitrary Choices: When tuning your model’s parameters, seek out well-founded methods rather than relying on intuition alone.

- Continuous Learning: Keep updating your knowledge base. The world of AI is constantly evolving, with new strategies and techniques cropping up regularly.

As we optimize our machine learning processes, we set the stage for more accurate, effective, and reliable models.

The AI Buzz Hub team is excited to see where these breakthroughs take us.