Revolutionizing Healthcare with LLM-as-a-Judge: Evaluating Generative AI in Radiology

In our ongoing exploration of leveraging generative AI, we’re diving deeper into the world of healthcare applications. Previous posts discussed various methods like fine-tuning large language models (LLMs), prompt engineering, and the innovative approach of Retrieval Augmented Generation (RAG) using Amazon Bedrock. This time, we’re excited to introduce a groundbreaking evaluation framework called LLM-as-a-Judge, specifically tailored for the unique challenges of medical applications.

The Evolution of RAG in Healthcare

Part 1 of our series focused on model fine-tuning, while Part 2 brought RAG into the spotlight. RAG connects LLMs with external knowledge bases to enhance accuracy and reduce hallucinations, which is particularly vital in medical settings where precise information is paramount. We evaluated these approaches with traditional metrics such as ROUGE scores, but found that they fell short in assessing how well the retrieved medical knowledge was actually integrated into the generated text.

Introducing LLM-as-a-Judge

In Part 3, we showcase LLM-as-a-Judge, an evaluation method for healthcare RAG applications built on Amazon Bedrock. This framework tackles the complexities of medical content assessment by checking that the accuracy of the retrieved knowledge and the quality of the generated text align with strict healthcare standards, including clarity, clinical relevance, and grammatical accuracy. By utilizing the latest models and the RAG evaluation feature from Amazon Bedrock, we can now thoroughly evaluate how these systems retrieve and utilize medical information to produce reliable, contextually pertinent responses.

Workflow Overview

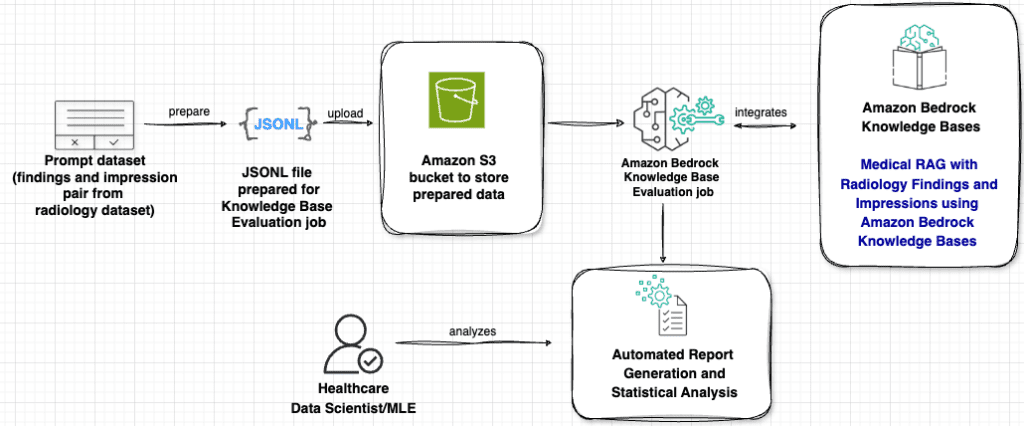

Our solution relies on the evaluation capabilities of Amazon Bedrock Knowledge Bases to assess and optimize a RAG application that generates impressions from radiology report findings. The workflow encompasses several key phases:

- Data Preparation: We begin with a prompt dataset of paired radiology findings and impressions, transforming this data into a structured JSONL format, essential for compatibility with the evaluation system. After formatting, the data is uploaded to an Amazon S3 bucket for secure access.

- Evaluation Processing: At the heart of our solution is an Amazon Bedrock Knowledge Bases evaluation job. This job processes the prepared data and integrates seamlessly with the knowledge base, establishing specialized RAG capabilities tailored for radiology.

- Analysis: The final stage empowers healthcare data scientists with detailed analytical insights through automated report generation. This report illuminates metrics related to summarization tasks for impression generation, enabling comprehensive assessments of retrieval quality and generation accuracy.

Enhancing Dataset Validity

For our evaluations, we utilized the MIMIC Chest X-ray (MIMIC-CXR) database, a rich source consisting of 91,544 chest radiographs with free-text reports. We sampled 1,000 reports from a subset of this dataset, which kept our evaluations focused yet comprehensive.

The Power of RAG with Amazon Bedrock

Utilizing Amazon Bedrock Knowledge Bases allows us to maximize the potential of RAG. By enriching queries with context from the knowledge base, we generated impressions from the findings of radiology reports, employing a structured knowledge base of findings organized as {prompt, completion} pairs.
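
To make the idea of "enriching queries with context" concrete, here is a minimal, illustrative sketch. It is not how Amazon Bedrock Knowledge Bases works internally: a toy word-overlap ranking stands in for the managed vector search, and the `Pair` class, the function names, the prompt wording, and the sample report text are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pair:
    """One knowledge base entry: findings as prompt, impression as completion."""
    prompt: str
    completion: str


def _tokens(text: str) -> set[str]:
    # Naive whitespace tokenization; a real system would use embeddings.
    return set(text.lower().split())


def retrieve(query: str, pairs: list[Pair], k: int = 2) -> list[Pair]:
    """Rank stored pairs by Jaccard word overlap with the query findings."""
    q = _tokens(query)

    def score(pair: Pair) -> float:
        p = _tokens(pair.prompt)
        union = q | p
        return len(q & p) / len(union) if union else 0.0

    return sorted(pairs, key=score, reverse=True)[:k]


def build_augmented_prompt(findings: str, examples: list[Pair]) -> str:
    """Prepend retrieved {prompt, completion} pairs as context for the LLM."""
    context = "\n\n".join(
        f"Findings: {e.prompt}\nImpression: {e.completion}" for e in examples
    )
    return (
        f"Similar prior reports:\n{context}\n\n"
        f"Findings: {findings}\nImpression:"
    )


# Made-up sample entries, for illustration only.
knowledge_base = [
    Pair("small left pleural effusion", "Left pleural effusion."),
    Pair("clear lungs no effusion", "No acute disease."),
]
query = "left pleural effusion present"
print(build_augmented_prompt(query, retrieve(query, knowledge_base, k=1)))
```

The retrieved pairs act as in-context examples, so the model sees how similar findings were summarized before it writes a new impression.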

The LLM-as-a-Judge Framework

LLM-as-a-Judge provides an advanced evaluation framework that goes beyond traditional metrics to assess generated content across five critical dimensions:

- Correctness: Graded on a 3-point scale, this measures factual accuracy against ground-truth responses.

- Completeness: Using a 5-point scale, it evaluates whether responses fully address the prompt.

- Helpfulness: This 7-point scale assesses the utility of the response in clinical contexts.

- Logical Coherence: A 5-point scale checks for consistency and logical flow of medical reasoning.

- Faithfulness: Rated on a 5-point scale, it identifies potential hallucinations or inaccuracies not reflected in the provided prompt.

These metrics provide a standardized output, allowing consistent comparisons across different models. This comprehensive evaluation framework not only enhances the reliability of medical RAG systems but also offers insights for continuous improvement.
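
Because the five dimensions use different scale lengths, comparing them requires a common range. The sketch below shows one plausible way to do that, assuming a simple linear mapping of each rating onto 0 to 1. The exact normalization Amazon Bedrock applies is not described in this post, so treat the formula, the metric keys, and the function names as assumptions.

```python
# Number of points on each scale, as listed in the dimensions above.
SCALE_POINTS = {
    "Correctness": 3,
    "Completeness": 5,
    "Helpfulness": 7,
    "LogicalCoherence": 5,
    "Faithfulness": 5,
}


def normalize(metric: str, rating: int) -> float:
    """Linearly map a rating in 1..N onto 0..1 (assumed mapping)."""
    points = SCALE_POINTS[metric]
    if not 1 <= rating <= points:
        raise ValueError(f"{metric} rating must be between 1 and {points}")
    return (rating - 1) / (points - 1)


def mean_scores(ratings: list[dict[str, int]]) -> dict[str, float]:
    """Average the normalized score per metric across judged responses."""
    totals: dict[str, float] = {}
    counts: dict[str, int] = {}
    for judged in ratings:
        for metric, rating in judged.items():
            totals[metric] = totals.get(metric, 0.0) + normalize(metric, rating)
            counts[metric] = counts.get(metric, 0) + 1
    return {metric: totals[metric] / counts[metric] for metric in totals}


# Hypothetical judge ratings for two responses.
print(mean_scores([
    {"Correctness": 3, "Helpfulness": 7},
    {"Correctness": 2, "Helpfulness": 4},
]))
```

Under this mapping, the top rating on any scale becomes 1.0 and the bottom rating becomes 0.0, so averages across metrics with different scale lengths can be read side by side.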

Setting Up the Evaluation

Setting up the evaluation involves various prerequisites, such as having the solution code, syncing the knowledge base, and converting test data into JSONL for RAG evaluation. The preparation includes writing scripts to transform records, ensuring each report meets established guidelines for generating high-quality impressions.
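
A transformation script for this step might look like the sketch below. The record layout (`conversationTurns`, `prompt`, `referenceResponses`) reflects our understanding of the JSONL format that Amazon Bedrock RAG evaluation expects and should be checked against the current documentation. The function names, the optional `instruction` prefix, and the sample text are hypothetical.

```python
import json


def to_eval_record(findings: str, impression: str, instruction: str = "") -> dict:
    """Build one evaluation record: findings as prompt, impression as ground truth."""
    prompt_text = f"{instruction}\n{findings}".strip() if instruction else findings
    return {
        "conversationTurns": [
            {
                "prompt": {"content": [{"text": prompt_text}]},
                "referenceResponses": [{"content": [{"text": impression}]}],
            }
        ]
    }


def to_eval_jsonl(pairs: list[tuple[str, str]], instruction: str = "") -> str:
    """Serialize (findings, impression) pairs as JSONL, one record per line."""
    lines = []
    for findings, impression in pairs:
        findings, impression = findings.strip(), impression.strip()
        if not findings or not impression:
            continue  # Skip incomplete reports rather than emit empty fields.
        lines.append(json.dumps(to_eval_record(findings, impression, instruction)))
    return "\n".join(lines)


# Made-up sample report, for illustration only.
print(to_eval_jsonl([("Lungs are clear. No effusion.", "No acute disease.")]))
```

The resulting string can be written to a `.jsonl` file and uploaded to the Amazon S3 bucket used by the evaluation job.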

Running the RAG Evaluation Job

With the configurations established, we initiate the evaluation job via the Amazon Bedrock API, orchestrating a thorough analysis of the RAG system. The evaluation focuses on five key metrics, providing a multi-dimensional view of each model’s performance during the impression generation tasks.
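
As a rough illustration, the request for such a job could be assembled as shown below. This is a sketch under assumptions, not the solution's actual code: the field names follow our best understanding of the `create_evaluation_job` operation on the boto3 `bedrock` client and should be verified against the API reference. The function name, the dataset name, the `task_type` default, and all argument values are placeholders.

```python
from typing import Any

# The five LLM-as-a-Judge metrics, using the built-in metric naming
# convention as we understand it (verify against the API reference).
JUDGE_METRICS = [
    "Builtin.Correctness",
    "Builtin.Completeness",
    "Builtin.Helpfulness",
    "Builtin.LogicalCoherence",
    "Builtin.Faithfulness",
]


def build_rag_evaluation_request(
    job_name: str,
    role_arn: str,
    dataset_s3_uri: str,
    output_s3_uri: str,
    knowledge_base_id: str,
    generator_model_arn: str,
    evaluator_model_id: str,
    task_type: str = "Summarization",  # assumed value; check the docs
) -> dict[str, Any]:
    """Assemble keyword arguments for a RAG evaluation job (assumed shape)."""
    return {
        "jobName": job_name,
        "roleArn": role_arn,
        "applicationType": "RagEvaluation",
        "evaluationConfig": {
            "automated": {
                "datasetMetricConfigs": [
                    {
                        "taskType": task_type,
                        "dataset": {
                            "name": "radiology-impressions",  # hypothetical
                            "datasetLocation": {"s3Uri": dataset_s3_uri},
                        },
                        "metricNames": list(JUDGE_METRICS),
                    }
                ],
                "evaluatorModelConfig": {
                    "bedrockEvaluatorModels": [
                        {"modelIdentifier": evaluator_model_id}
                    ]
                },
            }
        },
        "inferenceConfig": {
            "ragConfigs": [
                {
                    "knowledgeBaseConfig": {
                        "retrieveAndGenerateConfig": {
                            "type": "KNOWLEDGE_BASE",
                            "knowledgeBaseConfiguration": {
                                "knowledgeBaseId": knowledge_base_id,
                                "modelArn": generator_model_arn,
                            },
                        }
                    }
                }
            ]
        },
        "outputDataConfig": {"s3Uri": output_s3_uri},
    }
```

If the shape matches the current API, the returned dictionary could be passed as keyword arguments to `create_evaluation_job`. Keeping request construction separate from the API call makes the configuration easy to inspect and test without AWS credentials.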

Results and Insights

Our evaluations on two datasets produced high scores: dev1 yielded a correctness score of 0.98 and a logical coherence score of 0.99, while dev2 scored similarly at 0.97 for correctness and 0.98 for logical coherence. These results indicate that our RAG system effectively retrieves and utilizes medical information, achieving high ratings in factual accuracy and logical consistency.

Detailed Metrics Analysis

The metrics analysis interface offers a vivid display of example conversations, tracing the journey from the initial prompt through to the final assessment. With insights into retrieved contexts, generated responses, and ground truths, this tool enables an in-depth understanding of the RAG system’s performance.

Conclusion

The implementation of LLM-as-a-Judge marks a significant step forward for healthcare AI, giving us a more reliable way to assess the accuracy of generated medical content. The evaluation framework we've established using Amazon Bedrock underscores the importance of continuous assessment in medical applications, which can ultimately support better patient care.

As more healthcare organizations refine these innovative tools, prioritizing accuracy and safety in AI applications remains essential.

Stay tuned as we continue to explore the forefront of AI advancements in healthcare!
