Unlocking the Mysteries of MIDI Files: Scores vs. Performances in Deep Learning

If you’re starting a deep learning project that involves MIDI files, you need to understand the two main categories of MIDI data: MIDI scores and MIDI performances. The distinction matters to anyone working with music data, and especially to computer music researchers drawn to the wealth of available datasets.

MIDI, or Musical Instrument Digital Interface, is a communication protocol for exchanging messages between synthesizers and other MIDI devices. The core concept is straightforward: every time a note is played on a MIDI keyboard, one message is sent when the key is pressed (note on) and another when it is released (note off). This allows synthesizers on the receiving end to produce the desired sounds. However, MIDI data can carry quite different kinds of information, which leads to the pivotal difference between scores and performances. Neglecting it can mean wasted time or poorly informed choices of training data and methods.

Understanding MIDI

MIDI files gather a series of such messages and timestamp each one so that a musical piece can be reproduced accurately. Besides the essential note-on and note-off messages, MIDI also includes a range of additional messages, such as controller messages that carry pedal information. Visualization tools such as piano rolls can display this information, but remember that a piano roll is simply a representation, not a MIDI file itself.
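To make the message stream concrete, here is a minimal sketch that pairs timestamped note-on and note-off messages into notes. The tuple layout `(time, kind, pitch, velocity)` and the function name `pair_notes` are assumptions made for this illustration, not the API of a real MIDI library. A note-on with velocity 0 is treated as a note-off, which is a common MIDI convention.

```python
from collections import defaultdict


def pair_notes(messages):
    """Pair note-on/note-off messages into (onset, offset, pitch, velocity) tuples.

    `messages` is an iterable of (time_sec, kind, pitch, velocity) tuples sorted
    by time. This layout is hypothetical and chosen only for the example.
    """
    active = defaultdict(list)  # pitch -> queue of (onset, velocity) still sounding
    notes = []
    for time, kind, pitch, velocity in messages:
        if kind == "note_on" and velocity > 0:
            active[pitch].append((time, velocity))
        elif kind in ("note_off", "note_on") and active[pitch]:
            # Reached for note-off, or for note-on with velocity 0.
            onset, onset_velocity = active[pitch].pop(0)
            notes.append((onset, time, pitch, onset_velocity))
    return sorted(notes)


messages = [
    (0.00, "note_on", 60, 80),
    (0.02, "note_on", 64, 72),
    (0.50, "note_off", 60, 0),
    (0.55, "note_on", 64, 0),  # velocity 0: treated as a note-off
]
notes = pair_notes(messages)
for onset, offset, pitch, velocity in notes:
    print(f"pitch={pitch} onset={onset:.2f} offset={offset:.2f} velocity={velocity}")
```

In practice a MIDI library does this pairing for you; the sketch only shows what information a note is assembled from.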

How Are MIDI Files Created?

MIDI files can primarily be generated in two ways:

- Playing on a MIDI Instrument: This generates a MIDI performance.

- Manual Input: Writing the notes into a sequencer (like Reaper or Cubase) or using a musical notation editor (like MuseScore) results in a MIDI score.

Diving Deep: MIDI Performances vs. MIDI Scores

Let’s explore what each of these entails and how they differ, focusing on the information that can be extracted from the files rather than on the technical encoding.

MIDI Performances

A MIDI performance captures four types of essential information:

- Note Onset: When the note starts.

- Note Offset: When the note ends, allowing for note duration calculation (offset – onset).

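- Note Pitch: Encoded as an integer from 0 to 127 that identifies the note in semitone steps (60 is middle C) and thereby determines its frequency.

- Note Velocity: Indicates how forcefully the key was pressed, encoded as an integer from 0 to 127 (maximum intensity). A note-on message with velocity 0 is conventionally treated as a note off, so sounding notes have velocities from 1 to 127.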
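As a small worked example of these fields, the sketch below converts a pitch number to a note name and a frequency. It assumes 12-tone equal temperament with A4 (pitch 69) tuned to 440 Hz and the naming convention in which pitch 60 is C4; other tunings and octave-numbering conventions exist. The function names are made up for this example.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def pitch_to_frequency(pitch, a4_hz=440.0):
    """Frequency in Hz, assuming equal temperament with A4 (pitch 69) at a4_hz."""
    return a4_hz * 2 ** ((pitch - 69) / 12)


def pitch_to_name(pitch):
    """Note name with octave, using the convention that pitch 60 is C4."""
    return f"{NOTE_NAMES[pitch % 12]}{pitch // 12 - 1}"


def note_duration(onset, offset):
    """Duration of a note: offset minus onset, in the same time unit."""
    return offset - onset


for pitch in (60, 69, 72):
    print(pitch, pitch_to_name(pitch), round(pitch_to_frequency(pitch), 2))
```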

Most MIDI performances are recorded from piano playing, since keyboards are the most common MIDI instruments. Notably, the MAESTRO dataset from Google Magenta is one of the largest collections of human MIDI performances of classical piano music.

Key Characteristics

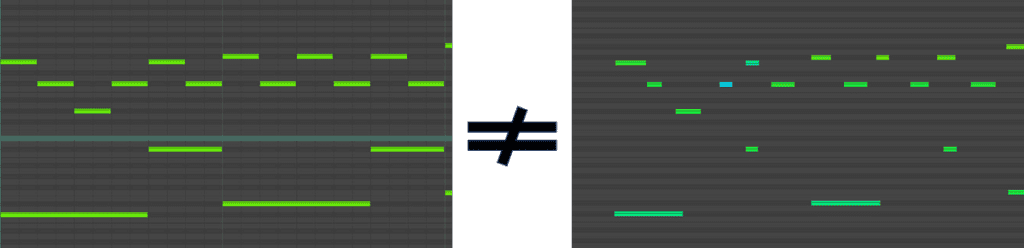

One fundamental property of MIDI performances is their inherent timing variation. In practice, musicians rarely strike the notes of a chord at exactly the same instant, nor do they hold notes of the same notated length for exactly the same duration. These small deviations stem partly from human limitations, but they also contribute to the music’s expressive qualities.
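A rough way to see this variation is to group near-simultaneous onsets into chords and measure how far apart the notes of each chord actually start. The sketch below uses made-up onset times, and the 50 ms grouping tolerance is an assumption for illustration rather than a standard value.

```python
def chord_onset_spreads(onsets, tolerance=0.05):
    """Group onset times (seconds) into chords and return each group's spread.

    An onset within `tolerance` seconds of the previous onset joins the same
    group. The 50 ms default is an illustrative assumption.
    """
    groups = []
    for onset in sorted(onsets):
        if groups and onset - groups[-1][-1] <= tolerance:
            groups[-1].append(onset)
        else:
            groups.append([onset])
    return [group[-1] - group[0] for group in groups]


performed = [0.000, 0.012, 0.027, 1.004, 1.019, 1.031]  # made-up performance onsets
notated = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]  # the same two chords as written in a score

print(chord_onset_spreads(performed))  # small but non-zero spreads
print(chord_onset_spreads(notated))    # no spread at all
```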

Example with Python

To illustrate working with a MIDI performance, let’s use the ASAP dataset, which is available on GitHub, and the Partitura library. Here is how you would load a MIDI file and print its data:

```python
from pathlib import Path

import partitura as pt

# Set the path to the ASAP dataset
asap_basepath = Path('../asap-dataset/')
performance_path = Path("Bach/Prelude/bwv_848/Denisova06M.mid")

# Load the MIDI performance
performance = pt.load_performance_midi(asap_basepath / performance_path)
note_array = performance.note_array()

print("Numpy dtype:")
print(note_array.dtype)
print("First 10 notes:")
print(note_array[:10])
```

The output will show the onset times, note pitches, and velocities, reflecting the nuanced timing characteristics of human playing.

MIDI Scores

In contrast, MIDI scores provide a richer array of information, including elements like time and key signatures, and they more closely resemble traditional sheet music. Within MIDI scores, onsets align neatly to a quantized grid determined by bar positions.

Key Characteristics

A defining feature of MIDI scores is their structured alignment to a time grid, permitting precise placement of notes on defined beats. The temporal units are often expressed as quarter notes or other musical divisions, reflecting their musical context rather than absolute time.
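The grid alignment can be checked directly. The following sketch measures what fraction of onsets, expressed in quarter-note beats, fall on a sixteenth-note grid, and converts a beat position to seconds at a constant tempo. The onset values, the function names, and the choice of four divisions per beat are all assumptions for illustration.

```python
def fraction_on_grid(onsets_in_beats, divisions_per_beat=4, tolerance=1e-6):
    """Fraction of onsets lying on a grid of `divisions_per_beat` steps per beat."""
    if not onsets_in_beats:
        return 0.0
    hits = 0
    for onset in onsets_in_beats:
        steps = onset * divisions_per_beat
        if abs(steps - round(steps)) <= tolerance:
            hits += 1
    return hits / len(onsets_in_beats)


def beats_to_seconds(beats, tempo_bpm):
    """Convert a position in beats to seconds, assuming a constant tempo."""
    return beats * 60.0 / tempo_bpm


score_onsets = [0.0, 0.25, 0.5, 1.0, 1.75]  # made-up, in quarter-note beats
performance_onsets = [0.01, 0.27, 0.49, 1.03, 1.74]  # made-up, converted to beats

print(fraction_on_grid(score_onsets))
print(fraction_on_grid(performance_onsets))
print(beats_to_seconds(1.75, tempo_bpm=120))
```

A fraction close to 1 suggests a score-like file, while a low fraction suggests a performance. Real scores may also need finer grids, for example for triplets.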

Example of MIDI Score

To access a MIDI score using Partitura, follow this process:

```python
from pathlib import Path

import partitura as pt

# Set the path to the ASAP dataset
asap_basepath = Path('../asap-dataset/')
score_path = Path("Bach/Prelude/bwv_848/midi_score.mid")

# Load the MIDI score
score = pt.load_score_midi(asap_basepath / score_path)
note_array = score.note_array()

print("Numpy dtype:")
print(note_array.dtype)
print("First 10 notes:")
print(note_array[:10])
```

The printed output will confirm the precise alignment of note onsets, indicative of the quantized nature of MIDI scores.

Choosing the Right Type for Your Project

Understanding the distinction between MIDI scores and performances is essential for developing effective deep learning systems:

- Opt for MIDI scores when working on music generation systems: their quantized, structured note representation needs a smaller vocabulary.

- Use MIDI performances for applications that explore how humans play and interpret music, such as beat tracking and emotion recognition systems.

- Combine both for hybrid tasks like expressive performance generation or score following.
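The vocabulary point in the first item can be illustrated with a toy count of distinct duration tokens. The durations below are made up, and the resolutions (a sixteenth note for the score, 10 ms for the performance) are assumptions; actual tokenizers differ in how they discretize time.

```python
def duration_vocabulary(durations, resolution):
    """Set of distinct duration tokens after rounding to multiples of `resolution`."""
    return {round(duration / resolution) for duration in durations}


# Made-up score durations in quarter-note beats, tokenized on a sixteenth-note grid.
score_durations = [0.5, 0.5, 1.0, 0.25, 0.25, 0.5, 1.0, 0.5]
score_vocab = duration_vocabulary(score_durations, resolution=0.25)

# Made-up performance durations in seconds, tokenized at 10 ms resolution.
performance_durations = [0.231, 0.262, 0.498, 0.223, 0.131, 0.270, 0.512, 0.244]
performance_vocab = duration_vocabulary(performance_durations, resolution=0.01)

print(len(score_vocab), len(performance_vocab))
```

Even in this tiny example the performance needs more distinct tokens than the score, because its durations do not collapse onto a few musical values.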

Conclusion

Navigating the world of MIDI files can be challenging because of the different layers of information they hold. With a firm grasp of the distinction between MIDI scores and MIDI performances, you can select the right data for your deep learning project, whether you are building generative models or performance-focused systems. Knowing which kind of file you are working with saves time and opens up many creative possibilities at the intersection of music and artificial intelligence.