DeepSeek-R1: Revolutionizing Open-Source Reasoning Models

In recent weeks, DeepSeek-R1 has been creating quite a stir, consistently dominating AI news feeds as the first open-source reasoning model of its kind. And the excitement? Well-deserved.

Unpacking DeepSeek-R1

DeepSeek-R1 is not merely another entry in the generative AI space; it stands out as a groundbreaking reasoning model. It is designed to produce text that lays out its reasoning and decision-making, which opens up possibilities for applications that demand structured, logic-driven outputs.

Its efficiency is also noteworthy. Training DeepSeek-R1 reportedly cost significantly less than training state-of-the-art models like GPT-4o, thanks to the reinforcement learning techniques DeepSeek AI used. And because the model is fully open source, users retain greater flexibility and control over their data.

While its potential is remarkable, working with DeepSeek-R1 isn’t without challenges, and anyone who’s tried deploying it can attest to the complexity involved. That’s where DataRobot steps in to streamline development and deployment. By simplifying these phases, DataRobot lets developers focus on building genuine, enterprise-ready applications rather than getting bogged down in technical hurdles.

Why DeepSeek-R1 Stands Out

What truly makes DeepSeek-R1 compelling is its unique reasoning capability. Unlike most generative models, it generates outputs that mimic human thought processes, enabling applications where clear, logical reasoning is vital. For businesses and developers alike, this trait opens doors to more sophisticated, engaging AI solutions.

However, the journey with DeepSeek-R1 can be intricate. From integration challenges to performance variability, mastering its functionalities will take a keen understanding of its strengths and limitations. Navigating this landscape effectively can yield significant benefits, delivering richer user experiences.

Utilizing DeepSeek-R1 with DataRobot

Although DeepSeek-R1 boasts impressive features, tapping into its potential is where DataRobot really shines. Within the DataRobot platform, users can host DeepSeek-R1 using NVIDIA GPUs for superior performance or utilize serverless predictions for rapid prototyping and flexible deployment.

This means you can compare its performance against other models, deploy it with enterprise-grade security, and build AI applications that deliver meaningful, relevant outcomes, all without having to manage complex infrastructure yourself.

Real-World Applications: Performance Insights

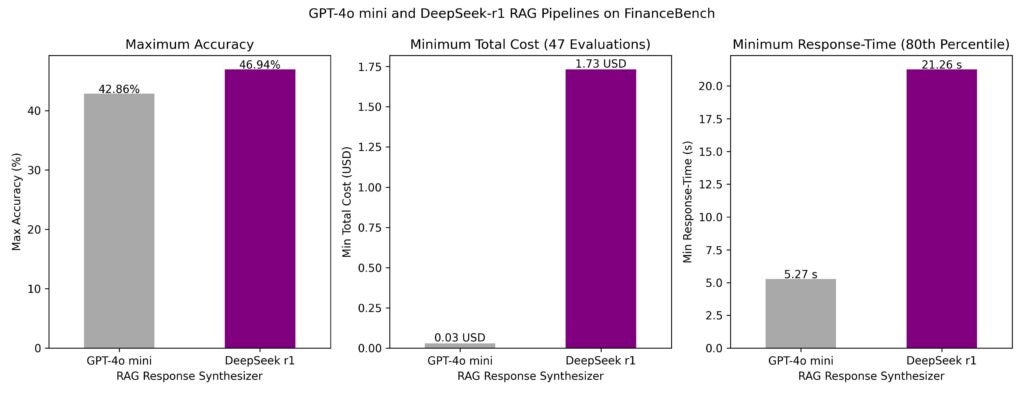

To see how DeepSeek-R1 performs practically, we evaluated it within multiple retrieval-augmented generation (RAG) pipelines using the widely recognized FinanceBench dataset, comparing it directly to the GPT-4o mini model.

Here’s a snapshot of the findings:

- Response Time: At the 80th percentile, GPT-4o mini responded in 5 seconds, compared to 21 seconds for DeepSeek-R1.

- Accuracy: When functioning as the synthesizer LLM, DeepSeek-R1 achieved 47% accuracy, surpassing GPT-4o mini’s 43%.

- Cost: DeepSeek-R1’s higher accuracy came at a much higher cost per call: approximately $1.73 per request, compared to just $0.03 for GPT-4o mini.
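To put those three numbers side by side, here is a small Python sketch that derives a few ratios from them. The inputs are the figures quoted above; the `cost_per_correct_answer` helper is our own illustrative metric (cost per call divided by accuracy), not something measured in the evaluation, and the dictionary keys are simply names chosen for this example.

```python
# Figures quoted in the findings above; everything derived from them is plain arithmetic.
RESULTS = {
    "DeepSeek-R1": {"latency_s": 21.0, "accuracy": 0.47, "cost_per_call_usd": 1.73},
    "GPT-4o mini": {"latency_s": 5.0, "accuracy": 0.43, "cost_per_call_usd": 0.03},
}


def cost_per_correct_answer(model: str) -> float:
    """Illustrative metric: expected spend per correct answer (cost per call / accuracy)."""
    row = RESULTS[model]
    return row["cost_per_call_usd"] / row["accuracy"]


def ratio(metric: str, numerator: str = "DeepSeek-R1", denominator: str = "GPT-4o mini") -> float:
    """How many times larger the metric is for one model than for the other."""
    return RESULTS[numerator][metric] / RESULTS[denominator][metric]


if __name__ == "__main__":
    for name in RESULTS:
        print(f"{name}: ${cost_per_correct_answer(name):.2f} per correct answer")
    print(f"Latency ratio: {ratio('latency_s'):.1f}x")
    print(f"Cost ratio: {ratio('cost_per_call_usd'):.1f}x")
```

On these figures, DeepSeek-R1 works out to roughly $3.68 per correct answer against about $0.07 for GPT-4o mini, which is one way to weigh the four-point accuracy gain against the price.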

DeepSeek-R1’s Reasoning Capabilities in Action

What sets DeepSeek-R1 apart is not just its ability to generate responses but how it reasons through intricate situations. This strength is incredibly beneficial for agent-based systems that must navigate dynamic, multi-layered challenges.

Take, for example, a query about the effects of a sudden drop in atmospheric pressure. Instead of giving a simple answer, DeepSeek-R1 could analyze the question from multiple angles, considering potential repercussions for wildlife, aviation, and even public health. This level of detail could help agents provide context-aware recommendations, like checking for flight delays due to weather. Other leading models may miss such nuanced impacts, which is where DeepSeek-R1 has an advantage in agent-driven applications.

DeepSeek-R1 vs. GPT-4o Mini: What the Data Reveals

When determining a model’s readiness for deployment, it’s important to go beyond surface-level performance. We recently assessed DeepSeek-R1 alongside GPT-4o mini using the Google BoolQ reading comprehension dataset, and the results illustrate that what sounds convincing isn’t always correct.

Our findings indicated that while DeepSeek-R1’s outputs showcased strong reasoning capabilities, correctness scores varied across models. As reasoning was added to the responses, correctness diminished in some instances. It’s a crucial reminder that deeper evaluations are essential to genuinely gauge a model’s readiness.
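For readers who want to try this kind of check themselves, here is a minimal Python sketch of scoring yes/no answers when a response also contains reasoning. It is not the evaluation harness we used: the function names and the sample responses are made up for illustration, and it assumes the model wraps its reasoning in `<think>...</think>` tags, which may not hold for every deployment or prompt format.

```python
import re

# Assumption: reasoning appears inside <think>...</think> before the final answer.
THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def extract_boolean_answer(response: str):
    """Return True/False for a yes/no verdict, or None if no verdict is found."""
    # Drop the reasoning trace so a stray "no" inside it is not read as the verdict.
    final_text = THINK_BLOCK.sub("", response).strip().lower()
    match = re.search(r"\b(yes|no|true|false)\b", final_text)
    if match is None:
        return None
    return match.group(1) in ("yes", "true")


def correctness(responses, labels) -> float:
    """Fraction of responses whose extracted verdict equals the gold label."""
    if not responses:
        return 0.0
    hits = sum(extract_boolean_answer(r) == y for r, y in zip(responses, labels))
    return hits / len(responses)


if __name__ == "__main__":
    # Hypothetical responses, not real model outputs.
    sample_responses = [
        "<think>The passage says the bridge opened in 1932, so no doubt here.</think>Yes, it did.",
        "No.",
        "<think>Could be yes or no; the text is ambiguous.</think>I cannot tell.",
    ]
    sample_labels = [True, False, True]
    print(f"Correctness: {correctness(sample_responses, sample_labels):.2f}")
```

The third sample shows the failure mode worth watching for: a response can contain plenty of plausible reasoning and still never commit to an answer, which counts as incorrect here.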

Hosting DeepSeek-R1 in DataRobot: A Quick Guide

Transitioning DeepSeek-R1 into operation via DataRobot can be straightforward. Whether you’re working with one of the base models or a fine-tuned variant, the pathway is clear:

- Start in the Model Workshop: Navigate to the “Registry” and find the “Model Workshop” tab.

- Add a New Model: Name your model and select “[GenAI] vLLM Inference Server.”

- Model Metadata Setup: Create a model-metadata.yaml file.

- Edit the Metadata: Paste values from a provided template.

- Configure Details: Input your Hugging Face token and model variant.

- Launch and Deploy: Save your settings, and your model will be live, ready for testing and integration.
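Once the model is live, a quick smoke test is a sensible next step. The sketch below builds a chat request in Python using only the standard library. It assumes the deployment exposes vLLM's OpenAI-compatible `/v1/chat/completions` route and accepts a bearer token; the base URL, API key, and model name are placeholders, and your DataRobot deployment may use a different endpoint path or authentication scheme, so check the deployment's own integration details before relying on this.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, prompt, model="deepseek-r1", max_tokens=1024):
    """Build (but do not send) a chat completion request.

    The route and Authorization header are assumptions about the deployment,
    and the default model name is a placeholder.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values; replace them with your deployment's URL and credentials.
    request = build_chat_request(
        "https://your-deployment.example.invalid",
        "YOUR_API_KEY",
        "Summarize the key risks in this filing.",
    )
    print(request.get_method(), request.full_url)
    # To actually send it: urllib.request.urlopen(request, timeout=120)
```

Given the response times reported earlier, a generous timeout is worth setting when you do send the request.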

Conclusion: Shaping the Future of AI

The evolution of generative AI technologies like DeepSeek-R1 is just beginning. By equipping yourself with the right tools and insights, you can harness these advancements effectively, assessing the specific models that best suit your needs.

DeepSeek-R1 exemplifies the potential of open-source reasoning models, and with DataRobot’s robust platform, deploying high-quality AI solutions becomes a reality.
