Think Twice: The Risks of Uploading Your Medical Data to AI Chatbots

Before you dive into your day, here’s a crucial reminder: be cautious about uploading your private medical information to AI chatbots.

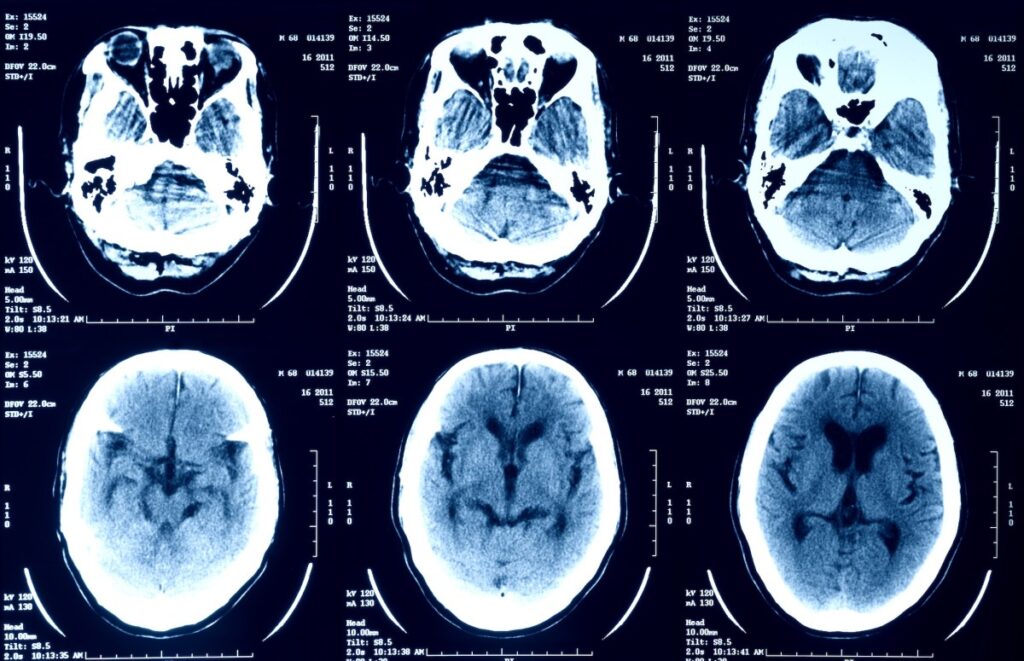

More and more people are turning to generative AI tools like OpenAI’s ChatGPT and Google’s Gemini for answers to their medical questions. Some have even relied on dubious apps designed to evaluate whether their genitals are disease-free. Recently, users on X (formerly Twitter) have been encouraged to upload their X-rays, MRIs, and PET scans to the AI chatbot Grok for interpretation. While this might sound convenient, it carries privacy risks that are easy to overlook.

The Special Nature of Medical Data

Medical data is a special category of sensitive information, with federal protections that, for the most part, only you can choose to waive. In other words, you generally decide what medical information to share and with whom. However, just because you can share your medical data doesn’t mean you should. Privacy advocates have long warned against uploading sensitive information to online services. Once shared, your data could be used to train AI models, creating privacy risks that may persist long after the upload.

It’s important to understand that generative AI models are often trained on the data users provide, on the premise that more data makes the models’ outputs more accurate and capable. Yet it is often unclear how companies handle uploaded data or with whom they share it. Users are left to trust these companies, usually without any guarantees.

The Risks You Might Not Consider

There have been alarming cases of people discovering their private medical records in AI training datasets. Beyond the breach of confidentiality, such exposure could put sensitive information in front of healthcare providers, future employers, or government agencies. Keep in mind, too, that many consumer apps that use AI are not covered by HIPAA (the U.S. Health Insurance Portability and Accountability Act), so the data you upload to them may have little or no legal protection.

Elon Musk, the owner of X, has encouraged users to upload their medical imaging to Grok, saying that although the results are still at an “early stage,” the AI will improve and become remarkably accurate at interpreting medical scans. Yet it remains unclear who exactly has access to the data Grok collects. X’s privacy policy states that the company shares user information with an unspecified list of “related” companies, which undercuts any expectation that your uploads will stay confidential.

What Does This Mean for You?

It’s a straightforward reality: once something is posted online, it rarely disappears. Sharing private information can leave you vulnerable, even if you believe you have kept it private.

Consider a hypothetical scenario: someone shares their MRI results with Grok out of curiosity, hoping for a quick assessment. Months later, that data could resurface somewhere unexpected, such as a potential employer’s background check or an insurer’s coverage decision.

Conclusion: Proceed with Caution

Ultimately, however enticing AI-driven insights may be, it’s essential to weigh the risks of uploading your private medical data to online platforms. Protecting your privacy should come first, and that means understanding how your data will be used before you share it.

Stay informed, stay cautious, and remember that safeguarding your medical privacy is a necessity, not a passing trend.

The AI Buzz Hub team is excited to see where these breakthroughs take us. Want to stay in the loop on all things AI? Subscribe to our newsletter or share this article with your fellow enthusiasts.