M5Stack Module LLM: An Offline AI Revolution for Smart Homes and Beyond

In an era when most AI services depend on the cloud, M5Stack has released the Module LLM, a device that brings AI capabilities to projects without requiring an internet connection. Billed as an “integrated offline Large Language Model (LLM) inference module,” it targets applications such as smart homes, voice assistants, and industrial control systems.

Unpacking the Module

At the heart of the Module LLM is the Axera Tech AX630C SoC, paired with 4GB of LPDDR4 memory and 32GB of eMMC storage. Its NPU delivers 3.2 TOPS at INT8 or 12.8 TOPS at INT4 precision, while the module's average runtime power consumption is just 1.5W. That low draw makes it well suited to always-on, long-term operation in both residential and industrial settings.
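To put the 1.5W figure in perspective, the short sketch below converts an average power draw into daily and yearly energy use. It assumes the module runs around the clock at exactly that average; real consumption will vary with workload and attached peripherals.

```python
# Average runtime power quoted for the Module LLM (assumed constant here).
AVG_POWER_W = 1.5

def energy_wh(power_w: float, hours: float) -> float:
    """Energy in watt-hours for a constant average power draw over `hours`."""
    return power_w * hours

daily_wh = energy_wh(AVG_POWER_W, 24)                  # one day of 24/7 operation
yearly_kwh = energy_wh(AVG_POWER_W, 24 * 365) / 1000   # one non-leap year, in kWh

print(f"{daily_wh:.1f} Wh/day, {yearly_kwh:.2f} kWh/year")
# prints "36.0 Wh/day, 13.14 kWh/year"
```

In other words, running the module continuously for a year uses roughly as much energy as a 1.5kW appliance running for under nine hours.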

The module includes a built-in microphone and speaker, a USB OTG port, and a microSD card slot that can be used for firmware updates. Through USB OTG, users can attach peripherals such as cameras or debugging tools.

Comparing Offline Solutions

The Module LLM joins other offline LLM solutions such as the SenseCAP Watcher and Radxa Fogwise Airbox. It is also compatible with M5Stack's CoreMP135, CoreS3, and Core2 IoT controllers, so it can be added to existing M5Stack-based projects.

Technical Specifications

Here’s a quick look at the technical specs of the Module LLM:

- SoC: Axera Tech AX630C

- CPU: Dual-core Arm Cortex-A53 @ 1.2 GHz

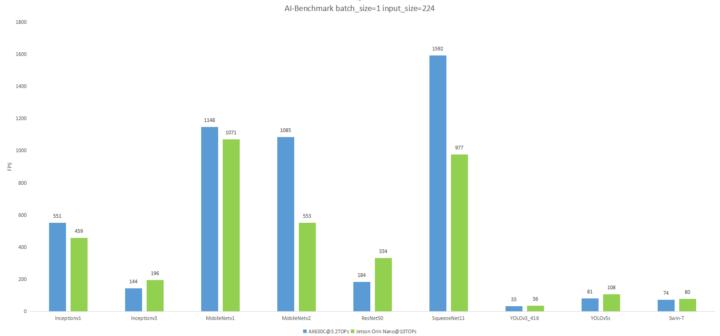

- NPU: 12.8 TOPS @ INT4, 3.2 TOPS @ INT8

- Memory: 4GB LPDDR4 RAM

- Storage: 32GB eMMC + microSD card slot

- Audio: Built-in microphone and speaker; supports text-to-speech (TTS) and automatic speech recognition (ASR)

- Connectivity: USB OTG, UART, FPC connector for Ethernet (via the separate debugging kit)

- Power Supply: 5V via USB-C

- Dimensions: 54 x 54 x 13 mm; Weight: 17.4g
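Since the spec list includes a UART interface, a host controller will typically exchange structured messages with the module over a serial link. The sketch below shows one common way to do that, newline-delimited JSON framing, using only Python's standard `json` module. The field names (`request_id`, `action`, `data`) and the framing itself are hypothetical, chosen for illustration; this is not the documented StackFlow protocol, so consult M5Stack's documentation for the actual message schema.

```python
import json

def encode_request(request_id: str, action: str, data: dict) -> bytes:
    """Serialize one request as a single JSON line (hypothetical schema)."""
    message = {"request_id": request_id, "action": action, "data": data}
    # Compact separators keep messages short on a slow serial link.
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")

class LineDecoder:
    """Accumulates raw serial bytes and returns complete JSON messages."""

    def __init__(self) -> None:
        self._buffer = b""

    def feed(self, chunk: bytes) -> list:
        self._buffer += chunk
        messages = []
        # Split off every complete line; keep any trailing partial line buffered.
        while b"\n" in self._buffer:
            line, self._buffer = self._buffer.split(b"\n", 1)
            if line.strip():
                messages.append(json.loads(line))
        return messages

frame = encode_request("req-1", "inference", {"text": "Turn on the lights"})
decoder = LineDecoder()
# Simulate the bytes arriving in two arbitrary chunks, as they might over UART.
first = decoder.feed(frame[:10])   # partial line: nothing decoded yet
second = decoder.feed(frame[10:])  # newline arrives: one full message
print(first, second)
```

Newline framing works here because `json.dumps` escapes any newline characters inside strings, so a raw `\n` byte can only appear at a message boundary.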

Enhanced Features and Future Compatibility

Built on M5Stack's StackFlow framework and supported by Arduino and UIFlow libraries, the Module LLM should be approachable for developers already familiar with those tools. Future updates are expected to add support for larger models such as the Qwen2.5-1.5B language model and the InternVL2-1B vision-language model, complementing the module's speech recognition (ASR) and text-to-speech (TTS) functions.
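Whether a model like Qwen2.5-1.5B fits in 4GB depends largely on weight precision, which is also why the NPU's INT8 and INT4 figures matter. The sketch below estimates the size of the weights alone, treating the model as roughly 1.5 billion parameters. It ignores the KV cache, activations, and runtime overhead, and the operating system also needs a share of the module's memory, so treat the results as lower bounds.

```python
def weight_footprint_gb(params: float, bits_per_weight: int) -> float:
    """Approximate size of model weights alone, in gigabytes (1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9

PARAMS = 1.5e9  # assumption: Qwen2.5-1.5B treated as ~1.5 billion parameters

for bits in (16, 8, 4):
    print(f"{bits}-bit weights: ~{weight_footprint_gb(PARAMS, bits):.2f} GB")
# prints:
# 16-bit weights: ~3.00 GB
# 8-bit weights: ~1.50 GB
# 4-bit weights: ~0.75 GB
```

Quantizing to INT8 or INT4 cuts the weight footprint to a half or a quarter of the 16-bit size, leaving far more headroom within 4GB.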

The module also supports computer vision models such as CLIP and YoloWorld, with DepthAnything and SegmentAnything planned for later updates, which should broaden the range of vision tasks it can handle offline.

Pricing and Availability

The Module LLM is priced at $49.90 on the M5Stack store, although it was out of stock at the time of writing. A separate debugging kit adds a 100 Mbps Ethernet port. The Module LLM and its accessories are expected to appear on platforms such as Amazon and AliExpress as well.

Conclusion

The M5Stack Module LLM is more than another AI-powered gadget: it reflects a shift toward offline, autonomous devices that do not depend on constant internet access. Because inference runs locally, it should appeal to tech enthusiasts exploring AI and IoT applications who also value privacy and reliability.

The AI Buzz Hub team is excited to see where these breakthroughs take us.