In an era where data breaches are becoming alarmingly common, Microsoft is stepping up its game with its Purview platform. The tech giant recently introduced AI features aimed at enhancing corporate data protection, particularly by speeding up investigations after a security incident. The new tool, Purview Data Security Investigations, is currently in public preview.

Showcased at a recent online security event, the Purview Data Security Investigations tool is a standout offering in Microsoft’s expanding security ecosystem. With attacks growing in sophistication, Microsoft is leveraging AI to counter threats, especially as attackers are also using AI to carry out their plans. The new capabilities represent a concerted effort by Microsoft to shore up its security offerings after earlier challenges in data protection.

This report dives into the features of Purview Data Security Investigations while also touching on other exciting AI enhancements, such as those focused on Entra identity management and Defender for AI services.

The Power of Purview

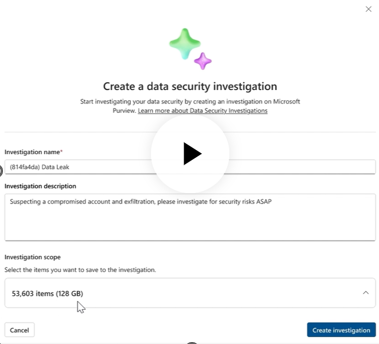

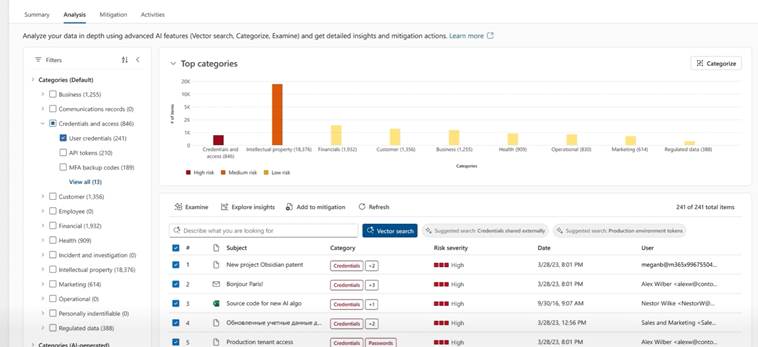

At its core, Purview Data Security Investigations employs AI to facilitate rapid, scalable analysis of data for security inquiries. Security admins can efficiently pre-scope their investigations by identifying impacted data, saving precious time. Rudra Mitra, corporate vice president at Microsoft Purview, illustrated this point during a demo, showcasing how over 50,000 events could be analyzed to pinpoint specific categories of interest, such as credential and access issues.

With a single click, administrators can access all relevant threat data, such as the 53,603 items linked to risky user activities. This automation not only speeds up investigations but also reduces manual workload.

The automatically generated reports provide admins with thorough overviews, including summaries of risks, suggested mitigation tactics, and the rationale behind the assessments made. Furthermore, the tool’s graphical interface helps visualize connections between affected users and activities, uncovering additional potential concerns.

Tackling Shadow AI Risks

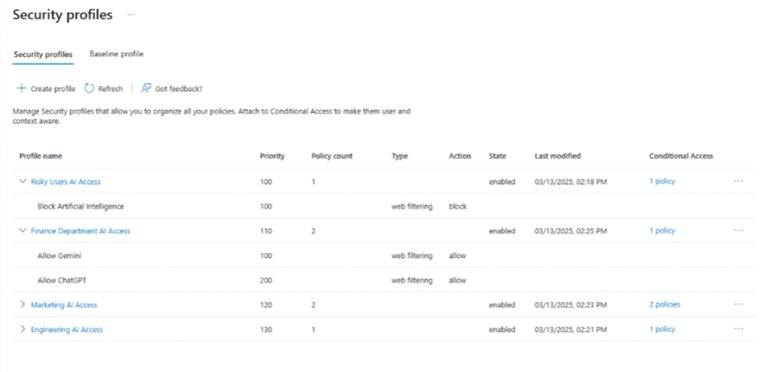

Microsoft’s executives recently highlighted an alarming trend in workplace technology: 78% of users have resorted to using their personal AI tools, which are neither approved nor regulated by their companies. To combat this challenge, Microsoft is releasing new features in Entra access management and Purview designed to mitigate high-risk access and potential data leaks.

With new capabilities in Entra, admins can establish web filters that tailor access policies for different user roles. For instance, finance department employees might have distinct access policies from those in research roles, providing more tailored security measures.
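To make the idea of role-specific web filtering concrete, here is a minimal Python sketch. It is not Entra's API or configuration format; the `WebFilterPolicy` class, the `POLICIES` table, and the example domains are all hypothetical, chosen only to show how two roles can end up with different access decisions for the same URL.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


# Hypothetical illustration only: none of these names come from Microsoft Entra.
@dataclass(frozen=True)
class WebFilterPolicy:
    role: str
    blocked_domains: frozenset = field(default_factory=frozenset)


# Assumed example policies: finance is more restricted than research.
POLICIES = {
    "finance": WebFilterPolicy(
        "finance", frozenset({"chat.example-ai.com", "notes.example-ai.com"})
    ),
    "research": WebFilterPolicy(
        "research", frozenset({"notes.example-ai.com"})
    ),
}


def is_allowed(role: str, url: str) -> bool:
    """Return True if the role's policy permits the URL; unknown roles are denied."""
    policy = POLICIES.get(role)
    if policy is None:
        return False
    host = (urlparse(url).hostname or "").lower()
    # Block each listed domain and any of its subdomains.
    return not any(
        host == domain or host.endswith("." + domain)
        for domain in policy.blocked_domains
    )
```

In this sketch, a researcher could reach `https://chat.example-ai.com/` while a finance employee could not, which mirrors the kind of per-role distinction described above.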

In addition, Purview is enhancing Microsoft Edge for Business by rolling out new data security controls that help prevent unauthorized sharing of sensitive information with AI applications. The browser can now instantly detect and block sensitive data that may be accidentally uploaded or pasted into non-corporate AI apps, further safeguarding company data.
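As a rough illustration of what "detect and block" can mean in practice, the Python sketch below checks pasted text for two common sensitive data types before it reaches a non-corporate destination. This is an assumption-laden toy, not how Purview or Edge for Business works internally: the patterns, the function names, and the `destination_is_corporate` flag are all hypothetical, and real products rely on far richer classifiers.

```python
import re

# Hypothetical patterns for illustration only.
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used to weed out digit runs that are not card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


def find_sensitive(text: str) -> list[str]:
    """Return labels for the sensitive data types found in the text."""
    findings = []
    if SSN_RE.search(text):
        findings.append("us_ssn")
    for match in CARD_RE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            findings.append("payment_card")
            break
    return findings


def should_block_paste(text: str, destination_is_corporate: bool) -> bool:
    """Block only when sensitive data is headed to a non-corporate app."""
    return (not destination_is_corporate) and bool(find_sensitive(text))
```

The Luhn check is there to reduce false positives: a long order number may look like a card number to the regular expression, but it will usually fail the checksum and be allowed through.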

Securing AI Services

During the same event, Microsoft underlined the importance of securing not just data but the AI services hosted in cloud environments. New capabilities in Microsoft Defender help teams identify and mitigate risks rapidly while protecting various cloud-hosted AI applications. Rob Lefferts, corporate vice president of threat protection, emphasized the significance of this feature.

The company is extending its AI Security Posture Management functionality beyond Azure OpenAI and Amazon Bedrock to include Google Vertex AI models and an array of other models from the Azure AI Foundry catalog. This is meant to give teams a single view of AI security risks across multi-cloud and multi-model settings.

Insights from the Frontlines

Microsoft’s partners are already reaping the benefits of these innovations. For instance, St. Luke’s University Health Network shared how Microsoft Security Copilot streamlined its security operations. The tool helps the organization consolidate data from multiple sources efficiently, so team members are informed about incidents right away.

Krista Arndt, associate chief information security officer, mentioned that the integration between Security Copilot and both Microsoft Defender and Microsoft Sentinel has significantly enriched the context for alerts. David Finkelstein, chief information security officer at St. Luke’s, expressed appreciation for how the tool aids in strategy building and fills operational gaps, saying, “It’s almost like having an extra person.”


As Microsoft continues to lead the charge in AI-driven security solutions, organizations can feel more empowered to protect their data. With tools like Purview Data Security Investigations, companies can uncover threats quickly and take proactive steps to safeguard their sensitive information. The AI Buzz Hub team is excited to see where these breakthroughs take us.