Nobel-Winning Physicist Raises Alarm Over AI: A Call for Caution and Understanding

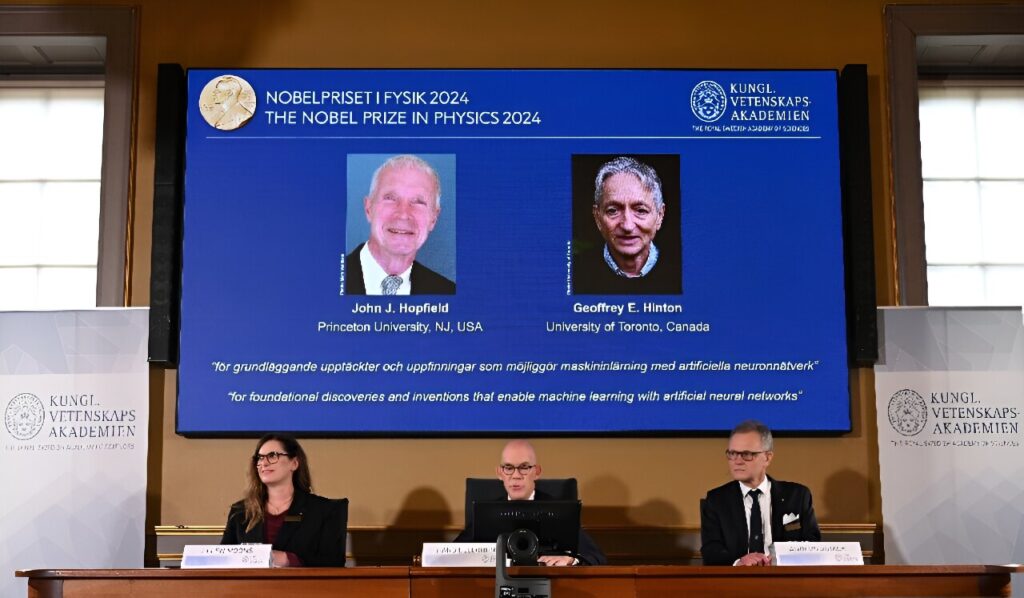

John Hopfield, professor emeritus at Princeton University and co-winner of the 2024 Nobel Prize in Physics, recently voiced deep concerns about the rapid advance of artificial intelligence (AI). Speaking by video link from Britain to colleagues in New Jersey, he stressed the urgent need to better understand how deep-learning systems work, warning that unchecked progress could lead to catastrophic consequences.

A Call for Clarity in an Evolving Field

At 91, Hopfield has witnessed the rise of two immensely powerful, yet potentially dangerous, technologies: biological engineering and nuclear physics. He noted that such technologies cut both ways, carrying both merit and menace. "One is accustomed to having technologies which are not singularly only good or only bad, but have capabilities in both directions," he said, underscoring the fine line humanity walks with such developments.

While AI continues to amaze with its capabilities, Hopfield pointed out an unsettling reality: many modern AI systems operate in ways that even their creators do not fully understand. "It’s very unnerving when you encounter something that has no control," he remarked. The fear of unpredictability looms large, and Hopfield and his fellow laureate Geoffrey Hinton are calling for a concerted effort to demystify AI and establish ethical guidelines to keep its development in check.

The Legacy of Hopfield Networks

Hopfield is celebrated for developing the "Hopfield network," a model inspired by how the brain stores and recalls memories: given a partial or corrupted version of a stored pattern, the network settles back to the complete original. His work laid the groundwork for a major leap in AI, which Geoffrey Hinton carried further. Hinton, often hailed as the "Godfather of AI," introduced the "Boltzmann machine," a model that adds randomness to how its units update, an idea that ultimately opened doors to the AI applications that shape our daily lives.
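A Hopfield network stores patterns in its connection weights and "remembers" by letting a noisy starting state settle into the nearest stored pattern. The short Python sketch below illustrates that idea, assuming the classic textbook setup: units that are either +1 or -1, weights built with the Hebbian rule, and units updated one at a time. The function names `train` and `recall` and the example pattern are illustrative choices, not code from Hopfield's work.

```python
# Minimal Hopfield network sketch (illustrative assumptions: +/-1 units,
# Hebbian weights, asynchronous updates; names are hypothetical).

def train(patterns):
    """Hebbian rule: w[i][j] = sum over patterns of p[i] * p[j], no self-connections."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w


def recall(w, state, max_sweeps=10):
    """Update units one at a time until a full sweep changes nothing."""
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            # Weighted input ("local field") that unit i receives from the others.
            h = sum(w[i][j] * s[j] for j in range(n))
            new = 1 if h >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s


stored = [1, -1, 1, -1, 1, -1, 1, -1]
w = train([stored])
noisy = [1, 1, 1, -1, 1, -1, -1, -1]  # same pattern with two units flipped
print(recall(w, noisy) == stored)  # True: the network restores the memory
```

Roughly speaking, a Boltzmann machine replaces the deterministic sign rule in `recall` with a probabilistic one, so a unit occasionally flips against its input; that injected randomness is what the paragraph above refers to.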

The Risk of Ignorance: A Fictional Analogy

Hopfield drew on Kurt Vonnegut’s novel "Cat’s Cradle" to illustrate his worries about AI. In the book, a man-made crystal called "ice-nine" is created to help soldiers cope with muddy conditions, but it ends up freezing the world’s oceans and bringing about civilization’s collapse. The tale is a stark reminder of how innovation can spiral out of control when it outpaces understanding and oversight.

"I’m worried about anything that says… ‘I’m faster than you are, I’m bigger than you are… can you peacefully inhabit with me?’" Hopfield mused, encapsulating the anxiety many feel about the future of AI.

A Unified Approach for AI Safety

Both Hopfield and Hinton have emerged as advocates for AI safety, urging the scientific community to prioritize research in this critical area. "We urgently need more research," Hinton stated, stressing that young researchers should devote their efforts to understanding AI’s implications and potential risks. They called on governments to compel large tech firms to provide the resources this research requires.

The Road Ahead

As we navigate the complexities of AI advancement, Hopfield’s insights remind us that our understanding of these systems must keep pace with their capabilities. With profound questions about intelligence and control looming on the horizon, it’s essential for both researchers and the public to engage in discussions about the future of AI.

In an age where AI is evolving faster than we can fully comprehend, taking a step back to promote understanding, transparency, and safety is absolutely critical. The AI Buzz Hub team is excited to see where these breakthroughs take us.