Groundbreaking BCI Technology Reaches New Heights at UC San Francisco

Imagine a world where a paralyzed patient can think about moving their limbs, and a robotic arm mirrors that intent. Thanks to innovative work at UC San Francisco, this vision is moving closer to reality. Researchers have unveiled a brain-computer interface (BCI) that interprets brain signals, translating a user's imagined movements into commands that control a robotic arm.

A Technological Leap Forward

Unlike previous BCIs, which typically lasted only two days before needing recalibration, this new device remained usable for seven months without major recalibration. That kind of longevity could mark a new era in assistive technology for people with limited mobility.

At the heart of this innovation lies an advanced AI model that learns and adapts to the user’s brain activity over time. Neurologist Karunesh Ganguly highlighted the significance of this synergy between human thought and artificial intelligence: “This blending of learning between humans and AI is the next phase for these brain-computer interfaces,” he stated. “It’s what we need to achieve sophisticated, lifelike function.”

How It Works

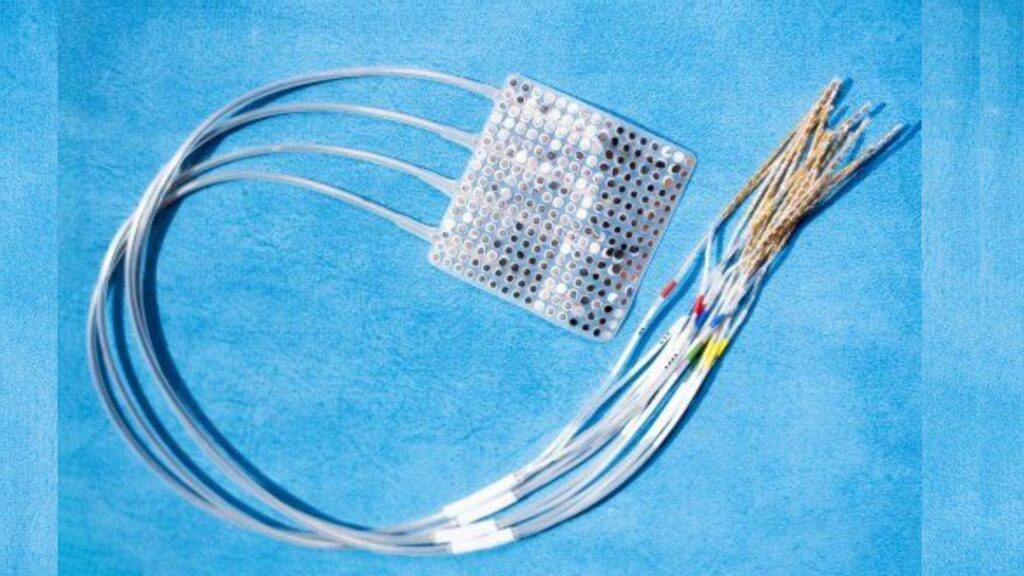

The BCI was tested on a study participant who had been paralyzed by a stroke. Small sensors implanted on the surface of his brain recorded its activity as he imagined moving his limbs or head. Researchers observed that while the overall patterns associated with each movement held steady, the precise locations of that activity shifted from day to day. This insight helps explain why earlier BCIs lost effectiveness so quickly.

To address this challenge, the research team built an AI model that automatically adjusted for these day-to-day shifts. For two weeks, the participant imagined simple movements while the AI learned from his brain activity. His first attempts to control the robotic arm were imprecise, but after practicing with a virtual arm that gave him immediate feedback, he quickly gained precision.

After mastering movements with the virtual arm, the participant successfully maneuvered the real robotic arm, accomplishing tasks such as grabbing and rotating blocks, and even more complex actions like retrieving a cup and filling it with water.

Lasting Progress and Future Aspirations

Remarkably, even months later, the participant could operate the robotic arm after just a 15-minute calibration session. Ganguly and his team are eager to refine the AI so that the robotic movements become smoother and faster. They also aim to test the system in a real-world home setting.

For individuals coping with paralysis, simple tasks such as bringing a drink to their lips or feeding themselves can be monumental hurdles. Ganguly expressed optimism: “I’m very confident that we’ve learned how to build the system now, and that we can make this work.”

The research findings have been published in the prestigious journal Cell, marking a significant milestone at the intersection of neuroscience and technology.

As we continue to explore these remarkable advancements, the possibilities for improving the quality of life for those with mobility challenges become more tangible. It’s a thrilling time for AI and medical innovation!

The AI Buzz Hub team is excited to see where these breakthroughs take us.