Assessing Security for Generative AI-Powered Assistants: A Comprehensive Guide

In recent years, generative AI has captured the imagination of businesses and consumers alike. One of the most popular applications is the generative AI-powered assistant, a digital helper that can respond to inquiries, automate tasks, and even engage in casual conversation. However, before launching such an application into production, organizations must complete a thorough readiness assessment that covers performance, security, and data integrity.

The Security Priority in Generative AI

Among the various concerns that come up during this readiness phase, security typically takes center stage. Without a clear understanding of potential security risks, organizations cannot effectively address them, and that lack of clarity can delay or even prevent the production deployment of a generative AI application. To illustrate this point, let’s explore a practical example of a generative AI assistant and how to evaluate its security posture using the OWASP Top 10 for Large Language Model (LLM) Applications.

Understanding Your Generative AI Application Scope

Before diving into security assessments, it’s essential to clarify where your application sits on the spectrum from fully managed to fully custom. For instance, AWS offers the Generative AI Security Scoping Matrix to help identify which security controls are your responsibility for a given type of application.

- Scope 1 (Consumer Apps): Public-facing applications such as ChatGPT, where most security measures are managed by the provider.

- Scopes 4 and 5: Applications built on models that you fine-tune (Scope 4) or train yourself (Scope 5), where you own most of the security controls.

- Scope 3, the focus of this article, sits between these two extremes: you build the application, such as a generative AI assistant, on top of a pre-trained foundation model.

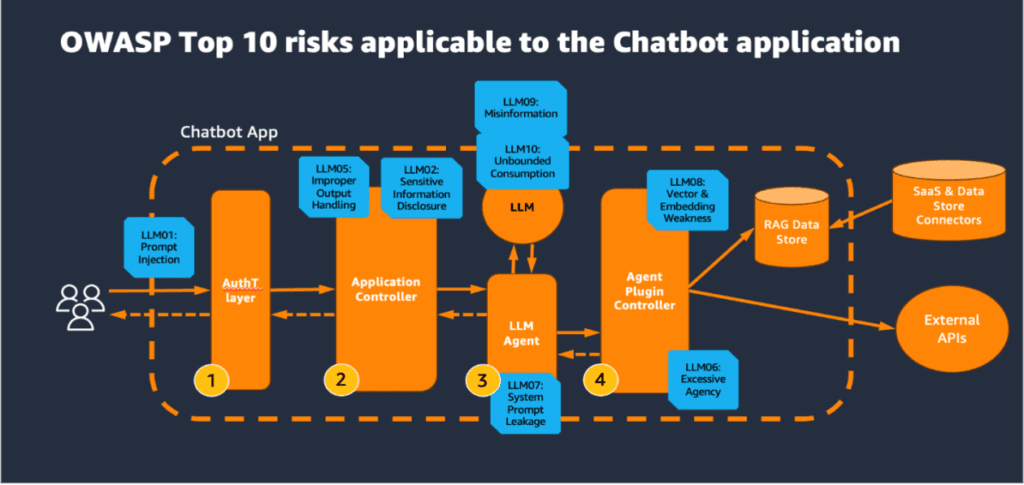

Leveraging the OWASP Top 10 for LLMs

The OWASP Top 10 is a well-established framework in the realm of application security that identifies the most prevalent vulnerabilities. For generative AI applications, there’s a tailored version specifically for LLMs, which we’ll use to understand potential threats and responses.

The Logical Architecture of a Generative AI Assistant

Let’s break down a typical generative AI assistant application’s architecture while overlaying the OWASP Top 10 for LLM risks. The user request usually journeys through several key components:

- Authentication Layer: Validates user identity, often facilitated through platforms like Amazon Cognito.

- Application Controller: Holds the core business logic and manages user requests and LLM responses.

- LLM and LLM Agent: The powerhouse of generative capabilities, dictating how user requests are processed.

- Agent Plugin Controller: Integrates various external APIs and services.

- RAG Data Store: Retrieves up-to-date knowledge securely from different data sources.
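The request path through these components can be sketched in a few lines of Python. This is only an illustrative outline, not the architecture's actual code: every function and field name here (`AssistantRequest`, `authenticate`, `handle_request`, and so on) is a hypothetical stand-in, and each stage simply records that it ran.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AssistantRequest:
    """Hypothetical request object passed between the layers."""
    user_id: Optional[str]
    prompt: str
    context: List[str] = field(default_factory=list)
    trace: List[str] = field(default_factory=list)


def authenticate(req: AssistantRequest) -> AssistantRequest:
    # Authentication layer: reject requests without a verified identity.
    if not req.user_id:
        raise PermissionError("unauthenticated request")
    req.trace.append("authentication")
    return req


def call_plugins(req: AssistantRequest) -> AssistantRequest:
    # Agent plugin controller: stand-in for calls to external APIs.
    req.trace.append("agent_plugin_controller")
    return req


def retrieve_context(req: AssistantRequest) -> AssistantRequest:
    # RAG data store: stand-in for a knowledge lookup.
    req.context.append("stub document")
    req.trace.append("rag_data_store")
    return req


def run_agent(req: AssistantRequest) -> AssistantRequest:
    # LLM and LLM agent: decides which plugins and data sources to use.
    req.trace.append("llm_agent")
    return retrieve_context(call_plugins(req))


def application_controller(req: AssistantRequest) -> AssistantRequest:
    # Application controller: business logic around the LLM call.
    req.trace.append("application_controller")
    return run_agent(req)


def handle_request(req: AssistantRequest) -> AssistantRequest:
    return application_controller(authenticate(req))
```

Each stage is a natural place to attach the mitigations discussed next, since a control applied early in the chain protects every component behind it.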

Security Mitigations Across Architectural Layers

Authentication Layer (Amazon Cognito)

Security threats like brute force attacks can surface at this layer. To combat these risks:

- Use multi-factor authentication (MFA) and implement session management best practices.

- Deploy AWS WAF to filter incoming traffic, blocking unwanted requests and helping prevent SQL injection and cross-site scripting attacks.
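As a rough sketch of what these two controls could look like in configuration, the helpers below build plain Python dictionaries shaped like the inputs to the Cognito `set_user_pool_mfa_config` call and to the `Rules` list of an AWS WAFv2 web ACL. The rule names, the limit of 300 requests per IP, and the helper function names are assumptions chosen for illustration; nothing here calls AWS.

```python
from typing import Any, Dict, List


def mfa_config(user_pool_id: str) -> Dict[str, Any]:
    """Keyword arguments for cognito-idp set_user_pool_mfa_config (TOTP required)."""
    return {
        "UserPoolId": user_pool_id,
        "SoftwareTokenMfaConfiguration": {"Enabled": True},
        "MfaConfiguration": "ON",
    }


def _visibility(metric_name: str) -> Dict[str, Any]:
    return {
        "SampledRequestsEnabled": True,
        "CloudWatchMetricsEnabled": True,
        "MetricName": metric_name,
    }


def rate_limit_rule(limit: int, priority: int) -> Dict[str, Any]:
    """Block an IP address that exceeds `limit` requests in the evaluation window."""
    return {
        "Name": "LoginRateLimit",  # hypothetical rule name
        "Priority": priority,
        "Statement": {"RateBasedStatement": {"Limit": limit, "AggregateKeyType": "IP"}},
        "Action": {"Block": {}},
        "VisibilityConfig": _visibility("LoginRateLimit"),
    }


def managed_rule(group_name: str, priority: int) -> Dict[str, Any]:
    """Reference an AWS managed rule group and keep its own block actions."""
    return {
        "Name": group_name,
        "Priority": priority,
        "Statement": {
            "ManagedRuleGroupStatement": {"VendorName": "AWS", "Name": group_name}
        },
        "OverrideAction": {"None": {}},
        "VisibilityConfig": _visibility(group_name),
    }


def build_web_acl_rules() -> List[Dict[str, Any]]:
    return [
        rate_limit_rule(limit=300, priority=0),  # assumed threshold
        managed_rule("AWSManagedRulesCommonRuleSet", priority=1),  # includes XSS rules
        managed_rule("AWSManagedRulesSQLiRuleSet", priority=2),  # SQL injection rules
    ]
```

In a real deployment these dictionaries would be passed to the AWS SDK or expressed in infrastructure-as-code, and the rate limit would be tuned to observed login traffic.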

Application Controller Layer (LLM Orchestrator)

This layer faces risks such as prompt injection and improper output handling. Mitigations include:

- Apply strict input validation and sanitization to prevent malicious inputs.

- Implement Amazon Bedrock Guardrails to filter sensitive content before it reaches the LLM.
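A minimal sketch of how the controller might combine both steps is shown below. `sanitize_prompt` is a hypothetical helper that normalizes text and strips control characters; it reduces malformed input but does not by itself stop prompt injection. `passes_guardrail` assumes a `bedrock-runtime` client is passed in and uses its `apply_guardrail` operation; the guardrail ID and version are placeholders you would supply.

```python
import re
import unicodedata
from typing import Any

# Control characters other than tab, newline, and carriage return.
_CONTROL_CHARS = re.compile(r"[\x00-\x08\x0b\x0c\x0e-\x1f\x7f]")


def sanitize_prompt(raw: str) -> str:
    """Normalize user text and reject empty or non-string input."""
    if not isinstance(raw, str):
        raise TypeError("prompt must be a string")
    text = unicodedata.normalize("NFKC", raw)
    text = _CONTROL_CHARS.sub("", text).strip()
    if not text:
        raise ValueError("prompt is empty after sanitization")
    return text


def passes_guardrail(
    client: Any, text: str, guardrail_id: str, guardrail_version: str
) -> bool:
    """Return False when the guardrail intervenes on the user input.

    `client` is assumed to be boto3.client("bedrock-runtime").
    """
    response = client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source="INPUT",
        content=[{"text": {"text": text}}],
    )
    return response.get("action") != "GUARDRAIL_INTERVENED"
```

Passing the client in as an argument keeps the function easy to test with a stub, and the same call with `source="OUTPUT"` can be used to screen model responses before they are returned to the user.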

LLM and LLM Agent Layer

Here, denial-of-service (DoS) attacks may overwhelm resources. To limit the impact:

- Set a maximum length for LLM requests and limit the number of queued actions so that a single request cannot exhaust capacity.

- Use content filters to manage the outputs generated by the LLM.
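The two resource limits can be sketched with the Python standard library alone. The thresholds (`MAX_REQUEST_CHARS`, `MAX_QUEUED_ACTIONS`) and the `AgentActionQueue` class are assumptions for illustration; real limits depend on the model's context window and your capacity planning.

```python
import queue

MAX_REQUEST_CHARS = 8000   # assumed limit, tune to the model's context window
MAX_QUEUED_ACTIONS = 5     # assumed limit on pending agent actions per session


def check_request_length(prompt: str, limit: int = MAX_REQUEST_CHARS) -> str:
    """Reject oversized requests before they reach the model."""
    if len(prompt) > limit:
        raise ValueError(f"request exceeds {limit} characters")
    return prompt


class AgentActionQueue:
    """Bounded queue: new actions are refused once the limit is reached."""

    def __init__(self, max_actions: int = MAX_QUEUED_ACTIONS) -> None:
        self._pending: "queue.Queue[str]" = queue.Queue(maxsize=max_actions)

    def enqueue(self, action: str) -> bool:
        try:
            self._pending.put_nowait(action)
            return True
        except queue.Full:
            return False

    def next_action(self) -> str:
        # Raises queue.Empty when nothing is pending.
        return self._pending.get_nowait()
```

Refusing work when the queue is full, rather than blocking, keeps a runaway agent loop from tying up resources that other users need.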

Agent Plugin Controller Layer

This layer integrates with third-party services and faces unique risks such as embedding weaknesses. Mitigations include:

- Apply least privilege access to AWS IAM roles and employ thorough user-level access control.

- Implement custom authorization logic in the action group Lambda function to restrict access effectively.
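One way the custom authorization check might look inside an action group Lambda function is sketched below. It assumes the application places a `userRole` value in the agent's session attributes when it invokes the agent, and that the action group is defined with an API schema so the event carries `apiPath` and `httpMethod`. The role names, paths, and `ALLOWED_ACTIONS` table are hypothetical.

```python
import json
from typing import Any, Dict, Set, Tuple

# Hypothetical permission table: role -> allowed (HTTP method, API path) pairs.
ALLOWED_ACTIONS: Dict[str, Set[Tuple[str, str]]] = {
    "viewer": {("GET", "/orders")},
    "admin": {("GET", "/orders"), ("POST", "/orders/refund")},
}


def _agent_response(event: Dict[str, Any], status: int, body: Dict[str, Any]) -> Dict[str, Any]:
    """Build a response in the shape an API-schema action group expects."""
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event.get("actionGroup"),
            "apiPath": event.get("apiPath"),
            "httpMethod": event.get("httpMethod"),
            "httpStatusCode": status,
            "responseBody": {"application/json": {"body": json.dumps(body)}},
        },
    }


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    # The role comes from session attributes set by the application,
    # not from anything the model or the user typed.
    role = (event.get("sessionAttributes") or {}).get("userRole")
    requested = (event.get("httpMethod"), event.get("apiPath"))
    if requested not in ALLOWED_ACTIONS.get(role, set()):
        return _agent_response(event, 403, {"error": "not authorized"})
    # Placeholder for the real business logic behind the plugin.
    return _agent_response(event, 200, {"result": "ok"})
```

Unknown or missing roles fall through to an empty permission set, so the handler denies by default.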

RAG Data Store Layer

This layer is responsible for acquiring and managing knowledge securely. Mitigations include:

- Encrypt data using AWS managed keys or customer-managed keys so that stored knowledge stays protected.

- Implement filtering policies to ensure that sensitive information remains protected.
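A filtering policy can be illustrated with a metadata filter applied at retrieval time. The sketch below builds a retrieval configuration in the shape accepted by the Amazon Bedrock Knowledge Bases `retrieve` operation; it assumes each document was ingested with an `access_group` metadata attribute, which is a hypothetical name, and it makes no AWS calls itself.

```python
from typing import Any, Dict, Iterable


def build_retrieval_config(user_groups: Iterable[str], max_results: int = 5) -> Dict[str, Any]:
    """Restrict retrieval to documents tagged with one of the user's groups."""
    groups = sorted(set(user_groups))
    if not groups:
        # Deny by default: a user with no groups retrieves nothing.
        raise PermissionError("user has no access groups")
    return {
        "vectorSearchConfiguration": {
            "numberOfResults": max_results,
            # "access_group" is an assumed metadata key set at ingestion time.
            "filter": {"in": {"key": "access_group", "value": groups}},
        }
    }


# Intended use (requires boto3 and AWS credentials, shown as a comment only):
#   client = boto3.client("bedrock-agent-runtime")
#   client.retrieve(
#       knowledgeBaseId="...",
#       retrievalQuery={"text": question},
#       retrievalConfiguration=build_retrieval_config(["finance"]),
#   )
```

Because the filter is derived from the authenticated user's groups rather than from the prompt, the model never sees documents the user is not entitled to read.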

Summary

In this guide, we explored the critical facets of securing a generative AI assistant application using AWS’s security framework and OWASP’s methodologies. By classifying your application effectively and implementing robust security measures, organizations can confidently deploy generative AI solutions while maintaining the integrity of their systems and user data.

As artificial intelligence continues to evolve, staying informed about emerging challenges and solutions is crucial.
